Word count: 3035. Estimated reading time: 15 minutes.

- Summary:

- The Raspberry Pi 5 looks like a great cheap colocated server solution. But only because providers special case a Raspberry Pi with extra low prices. If they only special cased anything under twenty watts with the same price, I’d be sending them an Intel N97 mini PC and avoid all this testing and hassle with the Raspberry Pi.

Sunday 15 December 2024: 14:39.

My current public internet node is dedi6.nedprod.com which, as you might guess from the number six, is the sixth iteration of said infrastructure. I previously talked about the history of my dedicated servers back in 2018, when I was about to build out my fifth generation server, which was based on an eight core Intel Atom box with 8Gb of ECC RAM and a 128Gb SSD for €15/month. That was a good server for the money: it ran replicating offsite ZFS, and it sucked less than your usual Atom server due to the eight cores and much faster clock speed. You even got a best effort 1 Gbit network connection, albeit peering was awful.

Alas, two years later in July 2020 that server never came back after a reboot, and nedprod.com had a two week outage due to all data being lost. Turns out that particular server hardware is well known to die with a reboot, which put me off getting another. I did at least get to discover if my backup strategies were fit for purpose, and I discovered, as that post related at the time, that there was room for considerable improvement. That has since been implemented, and I think I would never have a two week outage ever again. The sixth generation server infrastructure thus resulted, which consisted originally of two separate dual core Intel Atom servers with 4Gb of RAM and a 128Gb SSD each, ZFS replicating to the other and to home every few minutes. They originally cost €10 per month for the two of them, which has since increased to €12 per month. As that post relates, they’re so slow that they can’t use all of a 1Gbit NIC: I reckoned at the time they could use about 80% of it if the file were cached in RAM and about 59% of it if the file had to be loaded in. It didn’t take long for me to drop the two server idea and just have Cloudflare free tier cache the site served from one of the nodes instead. And, for the money and given how very slow those servers are, they’ve done okay.

However, technology marches on, and I have noticed that as the software stack gets fatter over time, 4Gb of RAM is becoming ever less realistic. It’s happening slowly enough that I don’t have to rush a replacement, but it is happening and at some point they’re going to keel over. 4Gb of RAM just isn’t enough for a full Mailcow Docker stack with ZFS any more, and the SATA SSDs those nodes have are slow enough that if a spike in memory were to occur, the server would just grind to a halt and I would be out of time to replace it.

The state of cheap dedicated servers in 2024

Prices have definitely risen at the bottom end. I had been hoping for last Black Friday I could snag a cheap deal, but stock was noticeably thin on the ground and I didn’t have the time to be sitting there pulsing refresh until stock appeared. So I missed out. Even then, most Black Friday deals were noticeably not in Europe, which is where I want my server for latency, and the deals weren’t particularly discounted even then.

Writing end of 2024, here is about as cheap as it gets for a genuine dedicated server with min 8Gb of RAM and a SSD in a European datacentre, with an IPv4 address:

- €14.50 ex VAT/month Oneprovider

- Four core Intel Atom 2.4Ghz with 8 Gb of 2133 RAM, 1x 120 Gb SATA SSD, and 1 Gbit NIC ('fair usage unlimited' [1]). Yes, same model as dedi5!

- €17 ex VAT/month OVH Kimsufi

- Four core Intel Xeon 2.2Ghz with 32 Gb of 2133 RAM, 2x 480 Gb SATA SSDs, and 300 Mbit NIC ('fair usage unlimited' [2]).

- €19 ex VAT/month Oneprovider

- Eight core Opteron 2.3Ghz with 16 Gb of 1866 RAM, 2x 120 Gb SATA SSDs, and 1 Gbit NIC ('fair usage unlimited' [1]).

I have scoured the internet for cheaper – only dual core Intel Atoms with 4Gb RAM identical to my current servers are cheaper.

However, during my search I discovered that more than a few places offer Raspberry Pi servers. You buy the Pi as a once off setup charge, then you rent space, electricity and bandwidth for as little as a fiver per month. All these places also let you send in your own Raspberry Pi. That gets interesting, because the Raspberry Pi 5 can take a proper M.2 SSD. I wouldn’t dare try running ZFS on an SD card, it would be dead within weeks. But a proper NVMe SSD would work very well.

[1]: OneProvider will cap your NIC to 100 Mbit quite aggressively. You may get tens of seconds of ~ 1 Gbit, then it goes to 100 Mbit, then possibly lower. It appears to vary according to data centre loads, it’s not based on monthly transfer soft limits etc.

[2]: It is well known that Kimsufi servers have an unofficial soft cap of approx 4 Tb per month, after which they throttle you to 10 Mbit or less.

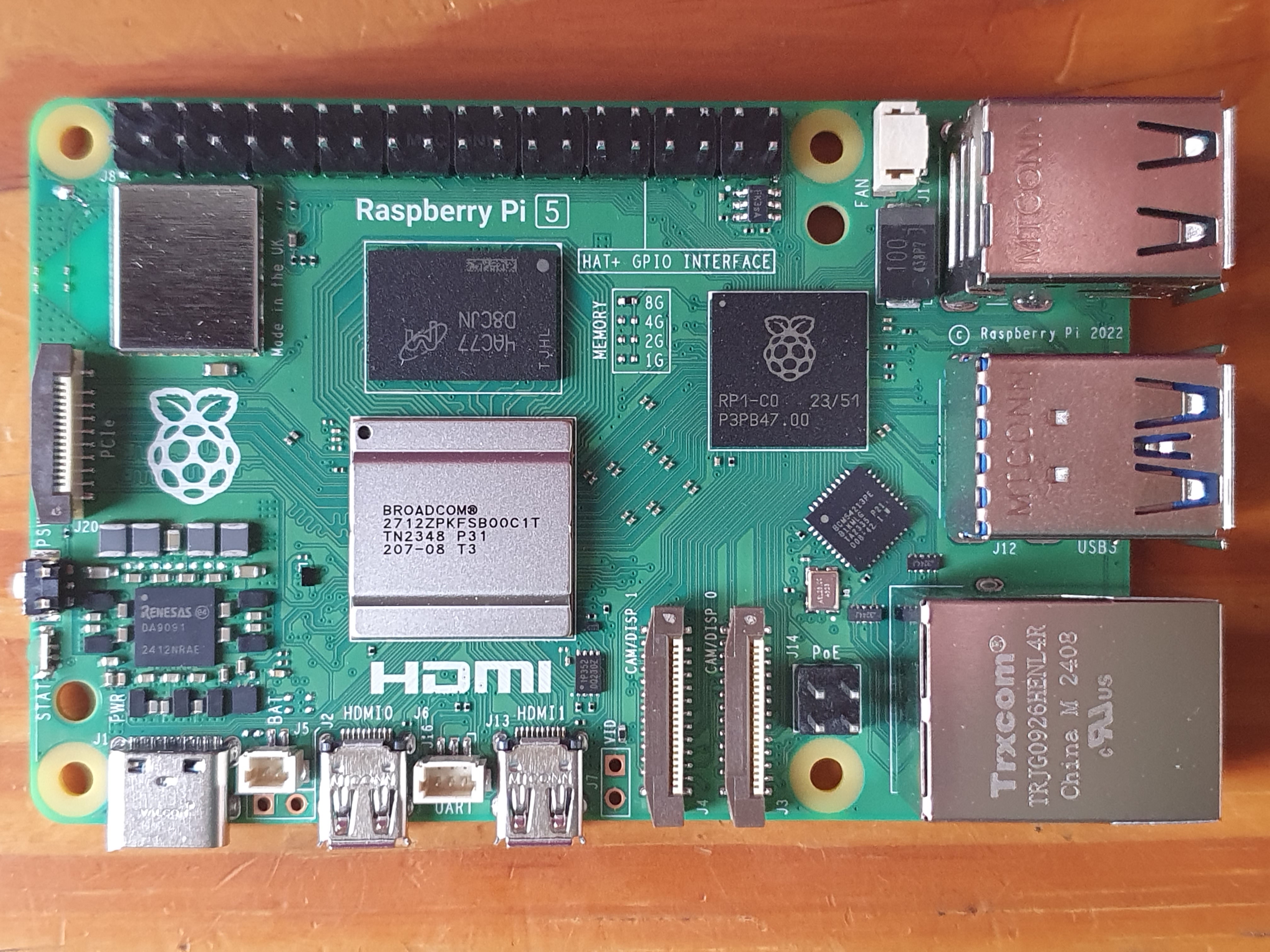

The Raspberry Pi 5

To be honest, I never have given anything bar the Zero series of the Raspberry Pi much attention. The Zero series are nearly as cheap as you can buy for a full fat Linux computer, and as this virtual diary has shown, I have a whole bunch of them. At €58 inc VAT each to include PoE and a case, they’re still not cheap, but they are as cheap as you can go unless you go embedded microcontroller, but then that’s not a full fat Linux system.

The higher end Raspberry Pis are not good value for money. Mine, the 8Gb RAM model with PoE, no storage and a case, cost €147 inc VAT. I bought Henry’s games PC for similar money, and from the same vendor I can get right now an Intel N97 PC with a four core Alder Lake CPU in a case and power supply with 12Gb of RAM and a 256Gb SATA SSD for €152 inc VAT. That PC has an even smaller footprint than the RaspPi 5 (72 x 72 x 44 vs 63 x 95 x 45): it’s smaller in two dimensions and has proper sized HDMI ports not needing an annoying adapter. It even comes with a genuine licensed Windows 11 for that price. That’s a whole lot of computer for the money, it’ll even do a reasonable attempt at playing Grand Theft Auto V at lowest quality settings. The Raspberry Pi is poor value for money compared to that.

However, cheap monthly colocation costs, so long as you supply a Raspberry Pi, make them suddenly interesting. I did a survey of all European places currently offering cheap Raspberry Pi colocation that I could find:

- €4.33 ex VAT/month https://shop.finaltek.com/cart.php?a=confproduct&i=0. Czechia. 100 Mbit NIC ‘unlimited’. Price includes an IPv4 address, which is an extra cost option you need to explicitly add (it costs nearly €2 extra per month, so if you can make do with IPv6 only, that would save a fair bit of money).

- €5.67 ex VAT/month $6 http://pi-colocation.com/. Germany. 10 Mbit NIC unlimited. Client supplies power supply.

- €6.00 ex VAT/month £5 https://my.quickhost.uk/cart.php?a=confproduct&i=0. UK. 1Gbit NIC 10Tb/month. Max 5 watts.

- €6.81 ex VAT/month https://raspberry-hosting.com/en/order. Czechia. 200 Mbit NIC ‘unlimited’. Also allows BananaPi.

- €14.60 ex VAT/month £12.14 https://my.quickhost.uk/cart.php?a=confproduct&i=0. UK. 1Gbit NIC 10Tb/month. Max 10 watts.

There are many more than this, but they’re all over €20/month and for that I’d just rent a cheap dedicated server and save myself the hassle.
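To get a rough feel for when sending in your own Pi starts to pay off against simply renting the cheapest dedicated server, here is a minimal sketch using the prices quoted above. It is only indicative: it mixes an inc VAT hardware price with ex VAT monthly prices, ignores the SSD and postage, and the function name is my own invention.

```python
import math

# Prices quoted in the text above (EUR).
PI_HARDWARE_EUR = 147.00        # Pi 5 8Gb with PoE and case, inc VAT
CHEAPEST_DEDICATED_EUR = 14.50  # per month, ex VAT
COLO_200MBIT_EUR = 6.81         # per month, ex VAT
COLO_100MBIT_EUR = 4.33         # per month, ex VAT


def break_even_months(hardware_eur, colo_monthly_eur, dedicated_monthly_eur):
    """Months until the monthly saving has repaid the hardware, or None if never."""
    saving = dedicated_monthly_eur - colo_monthly_eur
    if saving <= 0:
        return None
    return math.ceil(hardware_eur / saving)


print(break_even_months(PI_HARDWARE_EUR, COLO_200MBIT_EUR, CHEAPEST_DEDICATED_EUR))
print(break_even_months(PI_HARDWARE_EUR, COLO_100MBIT_EUR, CHEAPEST_DEDICATED_EUR))
```

On these figures the hardware pays for itself somewhere in the second year, which is why the colocation options over €20/month are not worth considering.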

So these questions need to be answered:

- Is a Raspberry Pi 5 sufficiently better than my Intel Atom servers to be worth the hassle?

- What if it needs to run within a ten watt or five watt power budget?

- Can you even get an Ubuntu LTS with ZFS on root onto one?

Testing the Raspberry Pi 5

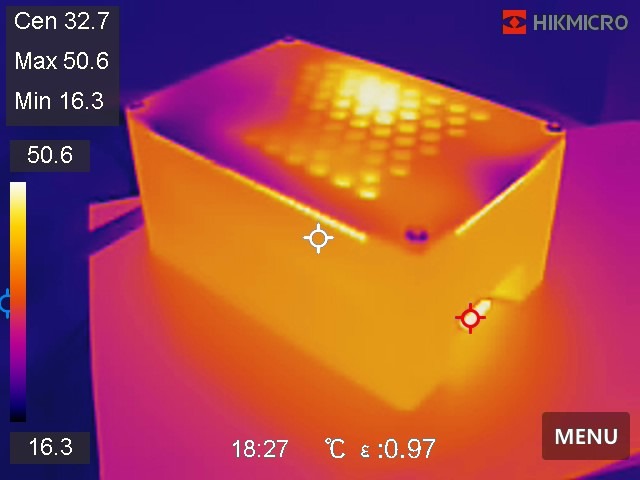

As you can see in the photo, unfortunately I got the C1 stepping, not the lower power consuming D0 stepping which consumes exactly one watt less of power. The case was a bundle of a full size NVMe SSD M.2 slot with PoE power HAT, official RaspPi 5 heatsink and cooler, and an aluminium case to fit the whole thing. It looks pretty good, and I’m glad it’s metal because the board runs hot:

This is it idling after many hours, at which it consumes about 5.4 watts of which I reckon about 1.5 watts gets consumed by the PoE circuitry (I have configured the fan to be off if the board is below 70 C, so it consumes nothing. I also don’t have the NVMe SSD yet, so this is minus the SSD). The onboard temperature sensor agrees it idles at about 65 C with the fan off.

After leaving it running stress-ng --cpu 4 --cpu-method fibonacci for a while the most power I could make it use is 12.2 watts of PoE with the fan running full belt and keeping the board at about 76 C. You could add even more load via the GPU, but I won’t be using the GPU if it’s a server. As with all semiconductors, they use more power the hotter they get, however I feel this particular board is particularly like this. It uses only 10.9 watts when cooler, the additional 1.3 watts comes from the fan and heat. How much of this is my particular HAT or the Raspberry Pi board itself, I don’t know.
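If you want to log the board temperature yourself while a stress test runs, Linux exposes it through the thermal sysfs interface as millidegrees Celsius. A minimal sketch follows. That thermal zone 0 is the SoC sensor is my assumption (it is the usual arrangement on a Pi), and the function names are made up for illustration.

```python
from pathlib import Path

# Assumption: thermal zone 0 is the SoC sensor, as is usual on a Raspberry Pi.
# Other boards may number their thermal zones differently.
THERMAL_ZONE = Path("/sys/class/thermal/thermal_zone0/temp")


def parse_millidegrees(raw):
    """Convert the sysfs reading (millidegrees Celsius as text) to degrees C."""
    return int(raw.strip()) / 1000.0


def read_temperature_c(path=THERMAL_ZONE):
    """Read the current temperature in degrees C from a thermal zone file."""
    return parse_millidegrees(Path(path).read_text())


if __name__ == "__main__":
    if THERMAL_ZONE.exists():
        print(f"{read_temperature_c():.1f} C")
    else:
        print("no thermal zone found at", THERMAL_ZONE)
```

Run in a loop alongside stress-ng, this gives a temperature trace to set against the power readings from the PoE switch.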

Is a Raspberry Pi 5 sufficiently better than my Intel Atom servers to be worth the hassle?

First impressions were that this board is noticeably quick. That got me curious: just how quick relative to other hardware I own?

Here are SPECINT 2006 per Ghz per core for selected hardware:

- Intel Atom: 77.5 (my existing public server, 2013)

- ARM Cortex A76: 114.7 (the RPI5, CPU first appeared on the market in 2020)

- AMD Zen 3: 167.4 (my Threadripper dev system, 2022)

- Apple M3: 433 (my Macbook Pro, 2024)

Here is the memory bandwidth:

- Existing public server: 4 Gb/sec

- Raspberry Pi 5: 7 Gb/sec

- My Threadripper dev system: 37 Gb/sec

- My Macbook Pro: 93 Gb/sec

(Yeah those Apple silicon systems are just monsters …)

For the same clock speed, the RPI 5 should be 50-75% faster than the Intel Atom. Plus it has twice as many CPU cores, an NVMe rather than SATA SSD, and possibly 40% more clock speed, so it should be up to 2x faster single threaded and 4x faster multithreaded. I’d therefore say the answer to this question is a definitive yes.
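The back of the envelope arithmetic behind those 2x and 4x figures can be sketched like this. It assumes performance scales linearly with SPECINT per Ghz, clock speed and core count, which real workloads rarely manage, so treat the result as an upper bound. The function name is mine.

```python
# Figures quoted in the text above.
SPECINT_PER_GHZ = {"atom": 77.5, "cortex_a76": 114.7}
CLOCK_RATIO = 1.4   # "possibly 40% more clock speed" for the Pi 5
CORE_RATIO = 4 / 2  # four Pi 5 cores vs two Atom cores


def estimate_speedup(per_ghz_old, per_ghz_new, clock_ratio, core_ratio):
    """Return (single_threaded, multithreaded) speedup factors.

    Assumes linear scaling with per-Ghz score, clock speed and core count.
    """
    single = (per_ghz_new / per_ghz_old) * clock_ratio
    return single, single * core_ratio


single, multi = estimate_speedup(
    SPECINT_PER_GHZ["atom"], SPECINT_PER_GHZ["cortex_a76"], CLOCK_RATIO, CORE_RATIO
)
print(f"single threaded: {single:.2f}x, multithreaded: {multi:.2f}x")
```

The per-Ghz ratio alone comes to about 1.48, which is where the lower end of the 50-75% range comes from. The upper end matches the memory bandwidth ratio of 7 to 4.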

(In case you are curious, the RaspPi5 is about equal to an Intel Haswell CPU in SPECINT per Ghz. Some would feel that Haswell was the last time Intel actually made a truly new architecture, and that they’ve been only tweaking Haswell ever since. To match Haswell is therefore impressive)

What if it needs to run within a ten watt or five watt power budget?

I don’t think it’s documented anywhere public that if you tell this board that its maximum CPU clock speed is 1.5 Ghz rather than its default 2.4 Ghz, it not only clamps the CPU to this but it also clamps the core and GPU and everything else to 500 Mhz. This results in an Intel Atom type experience, it’s pretty slow, but peak power consumption is restrained to 7.2 watts.

Clamping maximum CPU clock speed to one notch above at 1.6 Ghz appears to enable clocking the core up to 910 Mhz on demand. This results in a noticeably snappier user experience, but now:

- @ max 1.6 Ghz, peak power consumption is 9.1 watts.

- @ max 1.8 Ghz, peak power consumption is 9.4 watts.

- @ max 2.0 Ghz, peak power consumption is 9.6 watts.

The NVMe SSD likely would need half a watt at idle, so 1.8 Ghz is the maximum if you want to fit the whole thing including PoE circuitry into ten watts.
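The reasoning above is just a lookup against the measured peaks, which can be sketched as follows. The numbers are the ones quoted in this post. The half watt for the SSD is my estimate rather than a measurement, and the function name is illustrative.

```python
# Peak PoE power draw (watts) measured at each maximum CPU clock (Ghz),
# as quoted in the text above.
PEAK_WATTS = {1.5: 7.2, 1.6: 9.1, 1.8: 9.4, 2.0: 9.6}
SSD_IDLE_WATTS = 0.5  # estimate for the NVMe SSD at idle, not yet measured


def max_clock_within(budget_watts, peak_watts=PEAK_WATTS, extra_watts=SSD_IDLE_WATTS):
    """Return the highest clock whose peak draw plus extras fits the budget, or None."""
    fitting = [ghz for ghz, watts in peak_watts.items() if watts + extra_watts <= budget_watts]
    return max(fitting) if fitting else None


print(max_clock_within(10.0))  # 1.8
print(max_clock_within(5.0))   # None
```

Note this only budgets for the SSD at idle. An SSD under load would need more headroom than this leaves.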

The board idles above five watts, so using the max five watt package listed above is not on the radar at all. So would the Raspberry Pi 4 with a PoE HAT, so you’d need to use no better than an underclocked Raspberry Pi 3 to get under five watts. That uses an ARM Cortex A53, which is considerably slower than an Intel Atom.

Finally, you get about twenty seconds of four cores @ 1.6 Ghz before the temperature exceeds 70 C. As the fan costs watts and a hot board costs watts, it makes more sense to reduce the temperature at which the board throttles to 70 C. Throttling drops the CPU initially to 1.5 Ghz, then it’ll throttle the core to 500 Mhz and the CPU to 1.2 Ghz. It’ll then bounce between that and 1.6 Ghz as temperature drops below and exceeds 70 C. I reckon power consumption at PoE is about 7.2 watts. If you leave it at that long enough (> 30 mins), eventually temperature will exceed 75 C. I have configured the fan to turn on slow at that point, as I reckon the extra watts for the fan is less than extra watts caused by the board getting even hotter.

In any case, I think it is very doable indeed to get this board with NVMe SSD to stay within ten watts. You’ll lose up to one third of CPU performance, but that’s still way faster than my existing Intel Atom public server. So the answer to this question is yes.

Can you get Ubuntu LTS with ZFS on root onto one?

The internet says yes, but until the NVMe drive arrives I cannot confirm it. I bought off eBay a used Samsung 960 Pro generation SSD for €20 including delivery. It’s only PCIe 3.0 and 256 Gb, but the RaspPi 5 can only officially speak single lane PCIe 2.0 though it can be overclocked to PCIe 3.0. So it’ll do.

When it arrives, I’ll try bootstrapping Ubuntu 24.04 LTS with ZFS on root onto it and see what happens. I’ve discovered that Ubuntu 24.04 uses something close to the same kernel and userland as Raspbian, so all the same custom RPI tools and config files and settings work exactly the same between the two. Which is a nice touch, and should simplify getting everything up and working.

Before it goes to the colocation …

I’ve noticed that maybe one quarter of the time rebooting the Pi does not work. I think it’s caused by my specific PoE HAT, other people have also reported the same problem for that HAT. Managed PoE switches can of course cut and restore the power, so it’s not a problem but I’d imagine setting it up and configuring it remotely wouldn’t be worth the pain. It would be better to get it all set up locally, make sure everything works, and go from there.

In any case, I’d like to add load testing, so maybe have some sort of bot fetch random pages continuously to see what kind of load results and whether it can continuously saturate a 1 Gbit ethernet even with the ZFS decompress et al running in the background. I think it’ll more than easily saturate a 1 Gbit ethernet, maybe with half of each of the four cores occupied. We’ll find out!
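A bot like that could be as simple as the following sketch: a handful of threads fetching random URLs for a fixed duration and counting bytes. Everything here is illustrative rather than what I will actually run. The function names are mine, and a Python client may well become the bottleneck itself long before a 1 Gbit link is saturated, in which case a dedicated load testing tool would be the better choice.

```python
import random
import threading
import time
import urllib.request


def fetch_url(url, timeout=10.0):
    """Fetch one URL and return the number of body bytes received."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return len(response.read())


def run_load_test(urls, duration_s, workers=8, fetch=fetch_url):
    """Fetch random URLs from several threads; return (requests, bytes, errors)."""
    totals = {"requests": 0, "bytes": 0, "errors": 0}
    lock = threading.Lock()
    deadline = time.monotonic() + duration_s

    def worker():
        while time.monotonic() < deadline:
            try:
                size = fetch(random.choice(urls))
            except Exception:
                # Count any failed fetch (timeout, HTTP error, reset) and carry on.
                with lock:
                    totals["errors"] += 1
                continue
            with lock:
                totals["requests"] += 1
                totals["bytes"] += size

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return totals["requests"], totals["bytes"], totals["errors"]


def megabits_per_second(total_bytes, duration_s):
    """Convert a byte count over a duration into Mbit/s."""
    return total_bytes * 8 / duration_s / 1_000_000
```

Usage would be something like calling run_load_test with a list of page URLs on the Pi and a duration of 60 seconds, then passing the byte total to megabits_per_second to compare against the 1 Gbit line rate.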

There is also the question whether my Mailcow software stack would be happy running in Docker on Aarch64 rather than in x64. Only one way to find out. Also, does ZFS synchronisation work fine between ARM and Intel ZFS sets? Also only one way to find out.

Finally, after all that testing is done, I’ll need to make a choice about which colocation to use. The 10 Mbit capped NIC option I think is definitely out, that’s too low. That means a choice between the 1 Gbps ten watt constrained option, or the 200 Mbit unconstrained option for nearly exactly half the price.

The ten watt constrained option is a bit of a pain. In dialogue with their tech people, they did offer 95% power rating, so they’d ignore up to 5% of power consumption above ten watts. However, that Samsung SSD can consume 4 watts or so if fully loaded. If it happens to go off and do some internal housekeeping when the RaspPi CPU is fully loaded, that’s 13 watts. Depending on how long that lasts (I’d assume not long given how often we’d do any sustained writes), they could cut the power if say we exceeded the ten watt max for more than three minutes within an hour. That feels … risky. Plus, I lose one third of CPU power due to having to underclock the board to stay within the power budget.
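To make that risk concrete, here is a sketch of how such a 95% rule might be evaluated. I am assuming the provider samples power once a minute and ignores the worst 5% of samples in each hour, which is where three minutes comes from. How they actually meter is not something I know, and the function names are mine.

```python
def allowed_samples_over(sample_count, ignored_percent=5):
    """How many samples may exceed the cap before the rule is broken."""
    return sample_count * ignored_percent // 100


def exceeds_allowance(samples_watts, cap_watts=10.0, ignored_percent=5):
    """True if more than ignored_percent of the samples are above the cap."""
    over = sum(1 for watts in samples_watts if watts > cap_watts)
    return over * 100 > len(samples_watts) * ignored_percent


# One hour of per-minute samples: CPU fully loaded at 9.4 watts, with the SSD
# pushing the total to about 13 watts during housekeeping.
three_minute_burst = [9.4] * 57 + [13.0] * 3
four_minute_burst = [9.4] * 56 + [13.0] * 4

print(allowed_samples_over(60))               # 3
print(exceeds_allowance(three_minute_burst))  # False
print(exceeds_allowance(four_minute_burst))   # True
```

So a single four minute burst of SSD housekeeping under full CPU load would be enough to break the rule under these assumptions.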

Given that there would be no chance of my power getting cut, I think I can live with the 200 Mbit NIC. I wish it were more, but it’s a lot better than a 100 Mbit NIC. How often would I do anything needing more than 20 Mb/sec bandwidth? Mainly uploading diary posts like this, and so long as we’re in the rented accommodation our internet has a max 10 Mbit upload, which is half of that NIC cap. Cloudflare takes care of the public web server, so for HTTP NIC bandwidth doesn’t really matter except for fetching cold content.

Anyway, decision on colocation is a few weeks out yet. Lots more testing beckons first.

To conclude, the Raspberry Pi 5 looks like a great cheap colocated server solution. But only because providers special case a Raspberry Pi with extra low prices. If they only special cased anything under twenty watts with the same price, I’d be sending them an Intel N97 mini PC and avoid all this testing and hassle with the Raspberry Pi.
