Welcome to ned Productions (non-commercial personal website, for commercial company see ned Productions Limited). Please choose an item you are interested in on the left hand side, or continue down for Niall’s virtual diary.

Niall’s virtual diary:

Started all the way back in 1998 when there was no word “blog” yet, hence “virtual diary”.

Original content has undergone multiple conversions: Microsoft FrontPage => Microsoft Expression Web, legacy HTML tag soup => XHTML, XHTML => Markdown, and a ‘various codepages’ => UTF-8 conversion for good measure. Some content, especially the older stuff, may not have survived entirely intact, especially in terms of broken links or images.

- A biography of me is here if you want to get a quick overview of who I am

- An archive of prior virtual diary entries is available here

- For a deep, meaningful moment, watch this dialogue (needs a video player), or for something which plays with your perception, check out this picture. Try moving your eyes around - are those circles rotating???

Latest entries:

Word count: 2534. Estimated reading time: 12 minutes.

- Summary:

- The site’s fibre broadband connection was switched from Eir to Digiweb, resulting in a change in ping times and throughput. Packet loss and latency spikes were observed during peak residential traffic hours in the evening with Digiweb, but were steady and flat outside of this period. The new connection is shared between multiple customers, with a contention ratio of 32:1 for residential connections.

Friday 13 February 2026: 11:46.

Firstly, can you believe it’s been two years since fibre broadband was installed into the site? It was in January 2024, a few months after fibre broadband to the home (FTTH) was installed into the site’s village and became available. Before that, I had for the preceding six months run a Starlink satellite broadband which was not terrible, but it was a power hog (130w!) and it had a constant, though very low, rate of packet loss due to satellites moving around. Starlink is very definitely better than 4g based internet, probably better than vDSL, but not as good as a fibre connection which is very hard to beat if the underlying backhaul is up to snuff.

Because FTTH had only just been made available, at the time only Eir could see that the property had the possibility of fibre installation. Also, at that time, fibre broadband was expensive across the board, and a once off installation fee of €150-200 was common. If I went with an expensive Eir 1 Gbps business connection with a 24 month contract, they would do the first time installation for free, and at that time the monthly fee of €78.62 inc VAT was not terrible (Starlink’s was €61.50 inc VAT excluding the dish, and I remember most fibre broadband back then was around the €60-65 per month mark).

Obviously, where they catch you is (a) 24 months of paying €10-15 more per month easily pays for the ‘free’ installation and (b) prices for fibre broadband were surely going to drop over those two years, but you’d be locked into the 24 month contract. And that’s exactly how things played out: ten months ago I had fibre broadband installed into my rented home which had free first time installation and yet a monthly fee of just €35 inc VAT with a twelve month contract. That was a 500 Mbps connection, and the new contract at the site is for a 1 Gbps connection for a mere €30 inc VAT monthly for a 12 month contract. That’s a lot of forward progress on bandwidth per euro in just two years, we are doing better than doubling the value per euro per year!

That of course makes you wonder about the quality of the network, as that would be the obvious place to economise. The Layer 3 backhaul for both the site and my rented home is OpenEir, however each ISP runs its own Layer 4 on top. Some providers (Eir, Sky) use straight DHCP like a LAN, however most appear to use PPPoE, which is unfortunate as it is inferior, and as far as I can tell there is no good reason to continue to do so in modern systems, especially as the username and password are identical for all customers in an ISP. I assume it’s a legacy systems thing, a leftover from ADSL days, perhaps because their billing and management systems won’t then need upgrading.

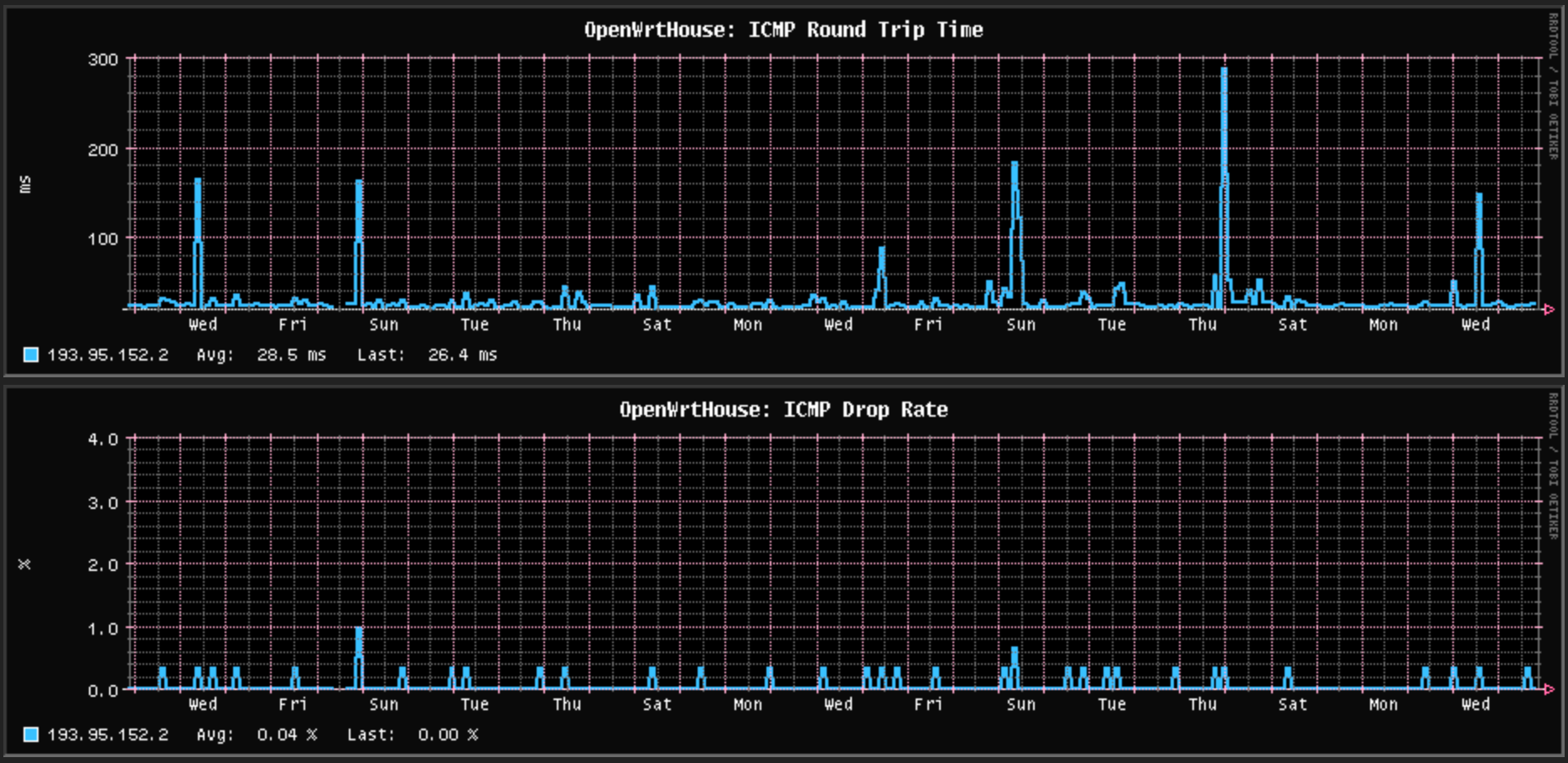

As noted when I installed the fibre broadband into my rented house, there are random bursts of packet loss and ping time spikes for the rented home fibre broadband connection. I don’t know if that’s the ISP (Pure Telecom) which uses BTIreland for Layer 3 backhaul, or the G.hn powerline network between the Fibre ONT and my outermost router, but in any case it persisted over most of this year only suddenly getting better from December onwards, and it now looks like this:

The past month of packet loss and latency spikes with the rented home fibre broadband

This is actually much better than it was for the majority of last year – there was far more ping time noise and it meant constant spikes in standard deviation while the connection was idle. Since December, that noise is so reduced it doesn’t show up in standard deviation even when the connection is downloading something at maximum speed, and I haven’t changed anything in the house so I assume Pure Telecom/BTIreland fixed something.

Obviously I only have a few hours of Digiweb ping times to look at, however so far I’d say they look a little more noisy than Pure Telecom’s during the peak residential traffic hours in the evening, but outside that they’re pretty much a steady flat 8-9 ms. There hasn’t been enough time to see if any ping requests get dropped.

The past day of packet latency with the new site fibre broadband

Eir also had flat as a pancake ping times (8.8 to 9.2 ms with a very occasional spike to … wait for it … 10.1 ms! every two months or so), but unlike Digiweb that was the case all day long every day with no sensitivity to evenings. However the Eir package was a business connection where its traffic gets priority over residential traffic, so it’s not surprising that ping times would be so consistent when you’re not competing with much other traffic at all.

Anyway, to the benchmarking! Here are the round trip times for each of those ISPs to various locations around the world, and remember lower is better for this graph:

As empirically tested in the article about the G.hn powerline adapters, they have a configuration option which lets you choose between power conserving and performance. I have mine on power conserving, so they go to sleep in between ping packets and thus they add ~18 milliseconds to ping times. In fact, if you can get the traffic rate up a bit, they won’t go to sleep and ping times drop dramatically, so the above graph looks worse than it is if you were maxing out a download. Where the G.hn powerline adapters particularly impact things is throughput which is basically capped to ~100 Mbps per connection, so you’ll need to use multiple connections to max out the speed. As all the locations will see 85 - 100 Mbps in this benchmark no matter where in the world, I left off the Pure Telecom results for this graph comparing single connection throughput to the same locations around the world:

This is with the default Linux TCP receive window of 3 MB, which I used as most people don’t think of fiddling with that setting on their edge routers. As you can see, Eir and Digiweb are very similar at distance; Digiweb is a good bit worse to London and Czechia, better to Paris and about equal to Amsterdam. This matches the RTT ping time differences above, so these are exactly the results you would expect given those ping times.

So why are the ping times so different? Eir peers with Twelve99 in Dublin, it routes via AS1273 Vodafone/Cable & Wireless straight into Central Europe, and it is therefore close to Czechia and a bit further away from London and Paris. Both Pure Telecom via BTIreland and Digiweb via their connectivity provider Zayo route to London, and then via Paris to the final destination. Eir routes US traffic using Hurricane Electric via Amsterdam, whereas both BTIreland and Zayo route to the US via London. Interestingly, Eir is slightly faster to reach Los Angeles despite Amsterdam being further away spatially.

In short, routing data the cheapest way is not the fastest way, and packets can take longer than optimum journeys over space to get to their destinations. We can thusly conclude:

- As all fibre broadband in Ireland apart from Eir always goes to INEX Dublin, it is always min 10 milliseconds to get anywhere.

- As all traffic apart from Eir leaving Ireland always goes to London first, it is always min 18 milliseconds to get anywhere outside Ireland.

- As all traffic reaching continental Europe takes at least 25 milliseconds to get there thanks to all the switching and distance, you’re already on a relatively high latency connection by definition (in case you were interested, internet traffic runs at 55-65% the speed of light between Ireland and Europe/US, with the maximum possible speed in fibre optics being 68% the speed of light). Continental Europe, in terms of internet cables, is a minimum 1,200 km away in the best case. Light within glass takes what it does to traverse that distance (about 17 ms).

The reason I’m raising minimum latencies to get anywhere is because the default maximum TCP receive window of 3 MB in Linux creates the following theoretical relationship of throughput to latency:

In other words, to achieve 1 Gbps in a single connection with a 3 MB TCP receive window, your RTT ping latency cannot exceed about 17 ms. Or, put another way, the only way you’ll see your full 1 Gbps per single connection is if you exclusively connect to servers in Ireland or Britain.

As the graph above suggests, increasing your TCP receive window to 8 MB increases your RTT ping latency maximum for a 1 Gbps per single connection to 45 ms, which is enough to cover most of continental Europe. In case you’re wondering why not increase it still further: you’ll find that the server will also have its own maximum send window, and a very common maximum is 8 MB at the time of writing. Increasing your receive window past the sender’s window does not result in a performance gain, and the larger your receive window the more latency spikes you’ll see because the Linux kernel has to copy more memory around during its garbage collection cycles. So you can actually start to lose performance with even larger windows, especially on the relatively slow ARM Cortex A53 in-order CPUs typical on router hardware.
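The relationship between window size, latency and throughput can be sketched with the textbook bandwidth-delay bound: a single connection cannot move more than one window of data per round trip. This is only an idealised upper bound, reading the window sizes as mebibytes; the function names are made up for illustration. It comes out roughly 1.5x more generous than the 17 ms and 45 ms figures quoted above, so the graph presumably accounts for overheads which this sketch does not model.

```python
MIB = 1024 * 1024  # bytes in one mebibyte


def max_rtt_ms(window_bytes: int, target_bps: float) -> float:
    """Largest RTT (ms) at which one connection could still reach target_bps.

    Idealised bound: throughput <= window / RTT, so RTT <= window / throughput.
    """
    return window_bytes * 8 / target_bps * 1000.0


def max_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on single connection throughput (Mbit/s) at a given RTT."""
    return window_bytes * 8 / (rtt_ms / 1000.0) / 1e6


if __name__ == "__main__":
    for window_mib in (3, 8):
        rtt = max_rtt_ms(window_mib * MIB, 1e9)
        print(f"{window_mib} MiB window: 1 Gbps is possible up to ~{rtt:.0f} ms RTT (ideal)")
    for rtt in (10, 25, 50, 150):
        mbps = max_throughput_mbps(3 * MIB, rtt)
        print(f"3 MiB window at {rtt} ms RTT: at most ~{mbps:.0f} Mbit/s")
```

The useful takeaway is the shape rather than the exact numbers: for a fixed window, the throughput ceiling halves every time the RTT doubles.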

Thankfully Linux makes increasing the TCP receive window to 8 MB ludicrously easy. Just add this to /etc/sysctl.conf:

net.ipv4.tcp_rmem = 4096 131072 16777216

This will work on any kind of recent Linux including OpenWRT and you almost certainly should configure your edge router this way if you have sufficient RAM for it to make sense. Linux will dynamically allocate up to 16 MB of RAM per connection for the TCP receive buffer, of which up to 50% forms the TCP receive window. Recent Linuces will automatically scale the window size and the memory consumed based on each individual connection so you don’t have to do more to see a 2x to 3x throughput gain from a single line change. In case you’re wondering what happens if there are thousands of connections all consuming 16 MB of RAM each on a device with no swap, you can relax as Linux will clip the maximum RAM per connection automatically if free RAM gets tight. Equally, this means that changing this parameter will only have an effect on router hardware with plenty of free RAM. Still, you can set this and nothing is going to blow up, it’ll just enter a slow path under load on RAM constrained devices.
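To check what a box is actually configured with, here is a minimal sketch which parses the three `tcp_rmem` values (minimum, default, maximum, all in bytes) and applies the "up to 50%" rule described above. The helper names are hypothetical, and the 0.5 fraction is simply the figure from the text, not something read from the kernel.

```python
from typing import NamedTuple


class TcpRmem(NamedTuple):
    min_bytes: int
    default_bytes: int
    max_bytes: int


def parse_tcp_rmem(value: str) -> TcpRmem:
    """Parse the 'min default max' triple used by net.ipv4.tcp_rmem."""
    parts = value.split()
    if len(parts) != 3:
        raise ValueError(f"expected three integers, got {value!r}")
    return TcpRmem(*(int(p) for p in parts))


def max_receive_window(rmem: TcpRmem, window_fraction: float = 0.5) -> int:
    """Largest receive window in bytes, assuming window_fraction of the buffer
    is usable as window (0.5 is the figure given in the text)."""
    return int(rmem.max_bytes * window_fraction)


if __name__ == "__main__":
    try:
        # Present on Linux; elsewhere fall back to the value from the text.
        with open("/proc/sys/net/ipv4/tcp_rmem") as f:
            rmem = parse_tcp_rmem(f.read())
    except OSError:
        rmem = parse_tcp_rmem("4096 131072 16777216")
    window_mib = max_receive_window(rmem) / (1024 * 1024)
    print(f"max buffer {rmem.max_bytes} bytes, so max window ~{window_mib:.1f} MiB")
```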

1 Gbps broadband appears to be the price floor as of this year in Ireland – the 500 Mbps service is barely cheaper if it is cheaper at all (for the site when I ordered the Digiweb package their 500 Mbps and 1 Gbps packages were identically priced under a ‘New Year special offer’), and from my testing above it would seem that at least both Eir and Digiweb are providing a genuine true 1 Gbps downstream from the public internet, albeit obviously shared between however many residential customers at a time. The next obvious step for next year’s competitive landscape is a new price floor of 2 Gbps for residential fibre broadband where it doesn’t cost much more than 1 Gbps. OpenEIR was built with up to 5 Gbps per residential user in mind, after that things would get a bit tricky technically speaking. But, to be honest, I find 2.5 Gbps ethernet LAN more than plenty, and my planned fibre backhaul for my house is all 2.5 Gbps based principally because (a) it’s cheap (b) it’s low power and (c) again, genuinely, do you really ever need more than 2.5 Gbps except on the very occasional case of copying a whole laptop drive to backup?

The Bluray specification maxes out at 144 Mbps though little content ever reaches that – a typical 4k Ultra HDR eight channel video runs at about 100 Mbps. High end 4k video off the internet uses more modern compression codecs, and typically peaks at 50 Mbps. You could handily run twenty maximum quality Bluray video streams, or forty maximum quality Netflix video streams on a 2 Gbps broadband connection. As most households would probably never run more than four or five of those concurrently (and usually far fewer), I suspect the residential market will mainly care about a guaranteed minimum of 100 Mbps during peak evening hours rather than maximum performance in off peak hours.

That brings us back to contention and how densely backhaul is shared across residential homes. Back in vDSL days, I paid the extra for a business connection into my rented house because vDSL broadband became noticeably sucky each evening, so by paying extra for my traffic to be prioritised over everybody else’s I had good quality internet all day long. Fibre to the cabinet (FTTC), which is what vDSL was, typically had 48:1 contention ratios for residential connections, but 20:1 plus a priority traffic queue for business connections. I had assumed that fibre broadband would have a similarly sucky experience in the evenings, but so far it’s been fine with Pure Telecom in my rented house. Time will tell for Digiweb at the site.

OpenEir uses a contention ratio of 32:1 for residential connections, but that’s of a 10 Gbps link so you always get a guaranteed minimum of 312 Mbps per connection. As noted above, due to the G.hn powerline in between the ONT in my rented house we are capped to about that in any case, so it’s unsurprising I haven’t noticed any performance loss in the evenings. 312 Mbps is of course plenty for several concurrent 4k Netflix video streams, so I suspect so long as streaming video never stutters, 99.9% of fibre broadband users will be happy.
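The stream counting and contention arithmetic above is simple enough to sketch. This uses only the figures quoted in the text (a 10 Gbps shared link, 32:1 contention, roughly 100 Mbps per Bluray stream and 50 Mbps per streamed 4k video); the function names are made up for illustration.

```python
def guaranteed_minimum_mbps(link_mbps: float, contention_ratio: int) -> float:
    """Worst case share of a link when every contending customer is maxing it out."""
    return link_mbps / contention_ratio


def concurrent_streams(available_mbps: float, stream_mbps: float) -> int:
    """How many whole streams of a given bitrate fit into the available bandwidth."""
    return int(available_mbps // stream_mbps)


if __name__ == "__main__":
    floor = guaranteed_minimum_mbps(10_000, 32)
    print(f"Guaranteed minimum on a 32:1 shared 10 Gbps link: {floor} Mbps")
    print(f"4k internet streams at that floor: {concurrent_streams(floor, 50)}")
    print(f"Bluray streams on 2 Gbps: {concurrent_streams(2_000, 100)}")
    print(f"4k internet streams on 2 Gbps: {concurrent_streams(2_000, 50)}")
```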

In fairness to governments, though it took them twenty years, they do appear to have finally solved ‘quality residential internet’ without any major caveats. I remember paying through the nose for cable based internet in Madrid back around the year 2000. It was the fastest package they had at 1 Mbps, and you usually got about 75 Kb/sec downloads off it. Back then hard drives were small, so you basically had it downloading 24-7 and you wrote out content to DVDs – I remember hauling a very heavy backpack stuffed with DVDs through the airport when I emigrated back to Ireland. A different era!

Word count: 1621. Estimated reading time: 8 minutes.

- Summary:

- The solar panel mounting kit was purchased from VEVOR for €55 inc VAT delivered each, and it consisted of two aluminium brackets made from 6005-T5 aluminium alloy, with a length of 1.27 metres, depth of 5 cm, and width of 3 cm. These brackets were stronger than expected and could withstand a load of up to 6885 Newtons before buckling.

Tuesday 10 February 2026: 10:37.

In the meantime, I’ve been trying to coax my architect into completing the Passive House certification work which had been let languish these past two years as until the builder and engineer had signed off on a completely complete design, there was no point doing the individual thermal bridge calculations as some detail might change. So all that had gone on hiatus until basically just before this Christmas just passed. My architect feels about thermal bridge calculations the same way as I feel about routing wires around my 3D house design i.e. we’d rather do almost anything else, but we all have our crosses to bear and when you’re this close to the finish line, you just need to keep up the endurance and get yourself over that line. It undoubtedly sucks though.

I completed a small but important todo item this week which was to complete the roof tile lifting arm + electric hoist solution shown in the last post by creating a suitable lifting surface. This is simply a mini pallet with the wood from an old garden bench whose metal sides rusted through screwed into it – the wood is a low end hard wood and must be easily a decade old now, but as it had no rot in it when I cut up the garden bench, I kept it and it’s now been recycled into usefulness – though I suspect that this use will be its last hurrah, as all those concrete tiles are going to batter the crap out of it:

As that’s hard wood, it’ll take more abuse than the soft pallet wood, and I even used the rounded edged lengths at the sides to reduce splintering when loading and unloading. I have a lifting hoist I’ll thread around and through it, and it should do very well if we keep the weight under 125 kg which is the limit for the electric hoist in any case.

Another small but important todo item was to solve how to mount solar panels onto the wall. We have six solar panels mounted on the south wall which act as brise soleil for the upstairs southern windows:

In the past three years I had not found an affordable and acceptable solution for how to mount those panels because I specifically did NOT want to use steel brackets, as those would produce rust stains running down the wall after a few years. I had consigned that problem to one that I’d probably have to fabricate my own brackets by hand from something like aluminium tube, so I was delighted to stumble across an aluminium solar panel mounting kit on VEVOR a few weeks ago. For €55 inc VAT delivered each you get two of these:

Actually, the bottom cross bar is an addition manufactured by me from 20x20x1.5 square aluminium tube, but I’ll get onto that in a minute. These VEVOR brackets are made from 6005-T5 aluminium alloy, are 1.27 metres long, 5 cm deep and 3 cm wide. They come with 304 stainless steel M8 bolt fasteners. I very much doubt that I could have made them for less than €28 each, and it would have taken me days to make all of them given I spent six hours making just the bottom cross bars alone. So I have saved both time and money here, which is always delightful.

Which brings me to cross bars. The outer brackets are very strong, and being braced at two or more points along their lengths I have zero concerns about them. That leaves the cross bar: it is a 2 mm thick, 570 mm long profile, 30 mm on one side and 20 mm on the other side. Using Euler’s buckling load formula:

… using the appropriate values for 6005-T5 aluminium alloy, and for which I, the minimum second moment of area, is the hardest part to calculate, and for a right angle length I reckon that is:

… you’d expect a maximum load before buckling of: 6885 Newtons.

(I checked my minimum second moment of area calculation using the much less simplified https://calcs.com/freetools/free-moment-of-inertia-calculator, and it is about right)
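For anyone wanting to redo this sum, Euler's critical load in its standard form is P = π²·E·I / (K·L)². The sketch below is not the calculation actually used for the 6885 Newtons figure, whose inputs are not shown here. It assumes a Young's modulus of 69 GPa (a typical figure for 6xxx series aluminium) and pinned ends (K = 1), and then back-solves for the second moment of area which those assumptions would imply.

```python
import math

E_ALUMINIUM_PA = 69e9  # ASSUMPTION: typical Young's modulus for 6xxx aluminium


def euler_buckling_load(e_pa: float, i_m4: float, length_m: float, k: float = 1.0) -> float:
    """Euler critical load in Newtons: pi^2 * E * I / (K * L)^2."""
    return math.pi ** 2 * e_pa * i_m4 / (k * length_m) ** 2


def implied_second_moment(load_n: float, e_pa: float, length_m: float, k: float = 1.0) -> float:
    """Second moment of area (m^4) which would give load_n as the Euler load."""
    return load_n * (k * length_m) ** 2 / (math.pi ** 2 * e_pa)


if __name__ == "__main__":
    # 570 mm crossbar and the 6885 N result quoted in the text;
    # K = 1 (pinned ends) is an ASSUMPTION, not taken from the text.
    i_m4 = implied_second_moment(6885, E_ALUMINIUM_PA, 0.570)
    print(f"Implied minimum second moment of area: {i_m4 * 1e12:.0f} mm^4")
    print(f"Round trip check: {euler_buckling_load(E_ALUMINIUM_PA, i_m4, 0.570):.0f} N")
```

Because the load scales with 1/L², the same profile at half the unsupported length would take four times the load before buckling.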

6885 Newtons looks plenty strong enough. Let’s check it: the solar panels have an area of about 1.8 m² and can take a wind load of up to 4000 Pa before disintegrating. We would have five brackets for four panels, so our design load needs to be 4000 Pa × 1.8 m² × 4 / 5 = 5760 Newtons. If the crossbar were at the far end, you would halve that load between the top M8 bolt and the bottom crossbar, but because we’re mounting these on a wall and not on the ground, and because we need the panels to be at a 35 degree angle, the crossbar HAS to be most of the way up the two side arms. Indeed, if you look again at the photo above where the angle is correctly set to 55 degrees so the panels are at 35 degrees, the crossbar is about one third from the top. This is effectively a lever, and I reckon that it would amplify the force on the crossbar by about double, which would buckle it if the panel ever experienced a 1673 Pa wind gust.

Here are the worst recorded wind gusts ever (with pressure calculated by P = 0.613 × V², with V in m/s):

- Worst in Ireland: 184 km/hr, 51 m/s = 1594 Pa

- Worst on land: 408 km/hr, 113 m/s = 7827 Pa

- Worst hurricane at sea: 406 km/hr, 113 m/s = 7827 Pa

- Worst tornado: 516 km/hr, 143 m/s = 12535 Pa

However, at an angle of 35 degrees, 0.57 of a horizontal wind pressure would apply to a panel, so not even 1000 Pa would ever land on a panel in the worst wind gust ever recorded in Ireland. So on that basis, that little cross bar should be more than plenty in real world conditions.
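The gust figures above can be reproduced with a few lines. This is a minimal sketch using only numbers from the text: the 0.613 × V² pressure formula, the 0.57 factor for a 35 degree panel, and the 1.8 m² panels spread as four panels over five brackets. The function names are made up for illustration.

```python
def wind_pressure_pa(speed_ms: float) -> float:
    """Dynamic wind pressure in Pa for a wind speed in m/s: P = 0.613 * V^2."""
    return 0.613 * speed_ms ** 2


def pressure_on_panel_pa(horizontal_pa: float, tilt_factor: float = 0.57) -> float:
    """Share of a horizontal wind pressure landing on the tilted panel.

    0.57 is the figure from the text for 35 degrees (sin(35 deg) is about 0.574).
    """
    return horizontal_pa * tilt_factor


def load_per_bracket_n(pressure_pa: float, panel_area_m2: float = 1.8,
                       panels: int = 4, brackets: int = 5) -> float:
    """Force in Newtons per bracket if the panel load is shared evenly."""
    return pressure_pa * panel_area_m2 * panels / brackets


if __name__ == "__main__":
    gusts_ms = {"Ireland": 51, "land": 113, "hurricane at sea": 113, "tornado": 143}
    for name, v in gusts_ms.items():
        p = wind_pressure_pa(v)
        on_panel = pressure_on_panel_pa(p)
        print(f"Worst {name}: {p:.0f} Pa horizontal, {on_panel:.0f} Pa on the panel, "
              f"{load_per_bracket_n(on_panel):.0f} N per bracket")
    print(f"Design load at 4000 Pa: {load_per_bracket_n(4000):.0f} N per bracket")
```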

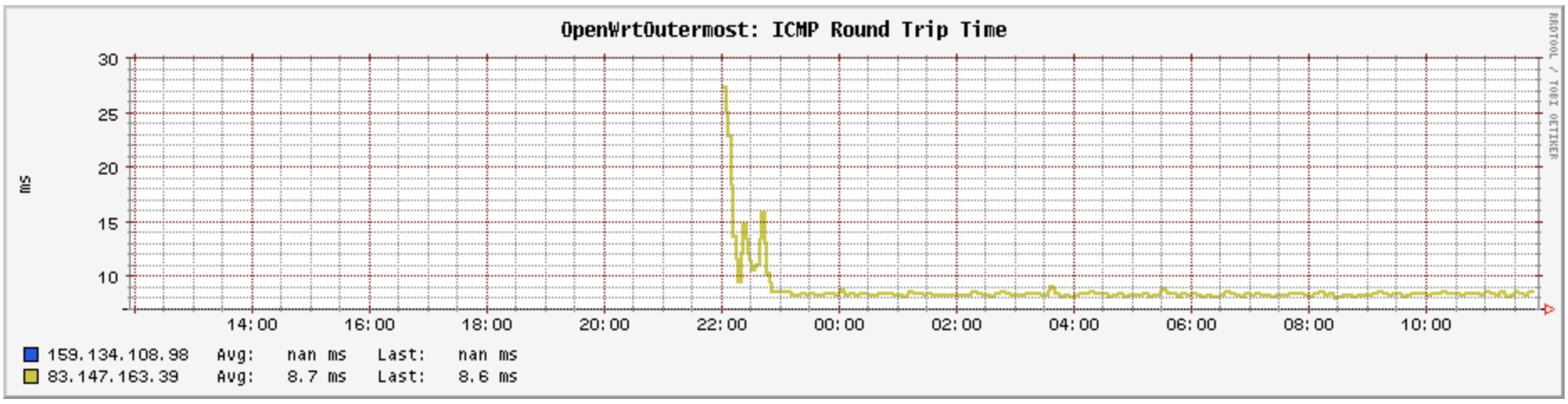

If these were steel brackets, we’d be done, but steel is unusual in the world of materials: it has a weird fatigue endurance curve. I’m going to borrow this graph from https://en.wikipedia.org/wiki/Fatigue_limit as it’s hard to explain in words:

Fatigue endurance of steel compared to aluminium over stress cycles

Most materials are like aluminium in that as you repeatedly flex them, their strength decreases as the number of flex cycles increases. This makes sense intuitively: imagine little tiny fibres in a rope breaking with each flex, and over time the rope loses strength. Steel however greatly slows down its strength loss after a million flexes, which is one of the big reasons so much structural stuff in modern society is made from steel: almost nothing as cheap and easy to mass produce as steel has this property. Hence your cars, houses, bridges, screws, bolts, nails etc anything which sees lots of repeated flex tends to be made from steel. Why is steel like this? It comes down to orientation of crystalline structure, but I’m getting well off the reservation at this point, so go look it up if you’re interested.

In any case, my brackets will be up in the wind getting repeatedly flexed, and that little cross bar would be getting flexed a lot. So while it might last five or even ten years, I had my doubts it would last until my death and those brackets will be an absolute pain to get to once the greenhouse is up. So I decided to add a second, longer, crossbar which you saw in the photo above.

For that I purchased eight one metre lengths of raw 20x20x1.5 square tube made out of 6060-T6 aluminium alloy. 6060-T6 is about half as strong as 6005-T5, but it didn’t matter for this use case and it was cheap at €4 inc VAT per metre. I drilled out holes for M8 stainless steel bolts, and voilà, there is the bracket above which is so strong that me throwing all my body weight onto it doesn’t make it flex even in the slightest. No flexing at all in any way is the ideal here as it maximises lifespan, so only the very slow corrosion of the aluminium will eventually cause failure.

Out of curiosity I calculated this second crossbar’s buckling load:

A 304 stainless M8 bolt will shear at 15,079 Newtons, so the top bolt of the bracket is fine. Assuming an even distribution of 5760 Newtons, that is 2880 Newtons on each end, a safety factor of 50% if the middle crossbar were not fitted. If I were not fitting the middle crossbar, I probably would have used 25 mm sized tube, because buckling strength is related to dimension cubed, it would be a very great deal stronger.

I will be fitting the middle cross bar however, but more to prevent any side flex of the side brackets than anything else. The far bigger long term risk here is loss of strength over time due to flex, and that middle cross bar does a fabulous job of preventing any flex anywhere at all. In any case, this bracket is now far stronger than necessary, it would take a 12 kN load which is far above when the panels would fall apart. I think they’ll do just fine.

Word count: 5240. Estimated reading time: 25 minutes.

- Summary:

- The 400b? full fat Claude Sonnet 4.5 model does by far the best summary, while the Qwen3 30b model is obviously a lot more detailed than the Llama3.1 8b model but misbalances what detail to report upon and what to leave out. The Llama3.1 8b produces a short summary consisting of only what it thinks are the bare essentials, and I would personally say it’s a fairly balanced summary choosing a fair set of things to report in detail and what to omit.

Friday 30 January 2026: 19:39.

Still, that’s expensive for a shell, you’d expect about €1,000-1,200 inc VAT per m2 for a NZEB build. Obviously we have one third better insulation which accounts for most of the cost difference, but some of the rest of the cost difference is the considerable steel employed to create that large vaulted open space. We should use 4.3 metric tonnes of the stuff, and thanks to EU carbon taxes steel is very not cheap in Ireland.

To get it to weathertight, I expect glazing will cost €80k inc VAT or so. I have no control over that cost, so it is what it is. I also have no control over the cost of the outer concrete block: blocks cost at least €2 inc VAT each and the man to lay them is about the same. The QS thinks that the exterior leaf will cost €25k, and the render going onto it €49k – I have no reason to disbelieve him, and again I have no control over that cost, so it is what it is.

Where I do have some control is for the roof. The QS budgeted €46k for that. I think if we drop the spec from fibre-cement tile to cheapest possible concrete tile and I fit the roof myself I can get that down by €30k or so. I put together this swinging arm and electric winch for lifting up to 100 kg of tiles at a time to the roof, it clamps onto scaffolding as you can see, and this should save us a lot of time and pain:

Put together for under €150 inc VAT delivered, the arm can extend to 1.2m and can lift up to 300 kg. The winch can only manage 250 kg at half speed, 125 kg at full speed.

Saving 30k on the roof doesn’t close the funding gap to reach weathertight, but it does make a big difference. It would be super great if a well paid six month contract could turn up soon, but market conditions are not positive: there is a very good chance I’ll have zero income between now and when the builder leaves the site.

I have no idea from where I’ll find the shortfall currently. What I do know is that next year planning permission expires as it’ll have been five years since we got the planning permission. We need the building to be raised and present ASAP. We’ll just have to hope that the tech economy improves, for which I suspect we need the AI bubble to pop so the tech industry can deflate and reinflate and the good work contracts reappear. I’ll be blunt and say I find it highly unlikely it can pop and recover within the time period we need, so I just don’t know. Cross that bridge when we get to it.

Anyway, turning to more positive topics, as there has been forward progress on the house build I’ve forced myself to work further on the services layout as I really hate doing them, so me forcing myself to get them done is a very good use of my unemployment time. Witness the latest 3D house model with services laid out:

I use a free program for this called Sweet Home 3D which has a ‘Wiring’ Plugin which lets you route all your services. The above picture overlays all the service layers at once which is overwhelming detail, however each individual service e.g. ventilation, or AC phase 1, is on its own individual layer. You can thus flip on or off whatever you are currently interested in. This 3D model is made in addition to the schematic diagrams drawn using QElectroTech which I previously covered here, and both are kept in sync. The schematic diagrams are location based, so if you’re in say Clara’s bedroom you can see on a single page all the services in there. You can get the same thing from the 3D model by leaving all the layers turned on and looking at a single room – and sometimes that is useful especially to see planned routing of things – but in the end the two models do different things and both will save a lot of time onsite when the day comes.

I am maybe 80% complete on the 3D model, whereas I am 99% complete on the schematic diagrams. I still have to route the 9v and 24v DC lines, and I suppose there will be some 12v DC in there too for the ventilation boost fans and the pumps in the showers for the wastewater heat recovery. I hope to get those done this coming week, and then the 3D model will more or less match the schematics and that’ll be another chore crossed off.

At some point the builder will produce a diagram of set out points for my surveyor, and then we can get service popups installed and the site ready for the builder to install the insulated foundations. That’s a while away yet I suspect. Watch this space!

What’s coming next?

I continue to execute my remaining A4 page of long standing chores of course, and I likely have a few more months left in those. I have an ISO WG14 standards meeting next week – none of my papers are up for discussion so it shouldn’t be too stressful, and if I’m honest I find the WG14 papers better to read than WG21 papers, so it’s easier to prep for that meeting. I have wondered why this is the case? I think it’s because I know almost all the good idea papers won’t make it at WG21 but they’ll take years and endless revisions before they make that clear, whereas at WG14 you usually get one no more than two revisions of papers which won’t make it. Also, I personally think more of the WG14 papers are better written, but I suppose that’s a personal thing. In any case, C++ seems to be in real trouble lately, this tech bubble burst looks like it’s going to be especially hard on C++ relative to other programming languages i.e. I think it’ll bounce back in the next bubble reinflation less than other programming languages. That’s 90% the fault of that language’s leadership – as I’ve said until I’m blue in the face why aren’t they doubling down on the language’s strengths rather than poorly trying to compete with the strengths of other programming languages – but nobody was listening, which is why I quit that standards committee last summer.

More AI

I’ve started spending a lot more time training myself into AI tooling, so much of my recent maintenance work with my open source libraries has had Qwen3 Coder helping me in places. Qwen3 Coder is a 480 billion parameter Mixture of Experts model, and Alibaba give a generous free daily allowance of their top tier model which is good for about four hours of work constantly using it per day (obviously if you are parsimonious with using it, you could eke out a day of work with the free credits). As far as I am aware, they’re the only game in town for a free of cost highest end model, as OpenAI, Anthropic, Microsoft, Google et al charge significant monthly sums for access to their highest end agentic AI assistants (they let you use much less powerful models free of cost, but they’re not really worth using in my experience). Also, unlike anybody else, you can download the full fat Qwen3 Coder and run it on your own hardware, and yes that is the full 480b model weighing in at a hefty 960 GB of data. As the whole model needs to be in RAM, to run that model well with a decent input context size you would probably need 1.5 TB of RAM, which isn’t cheap: I reckon about €10k just for the RAM alone right now. So Alibaba’s free credit allowance is especially generous considering, and you know for a fact that they can’t rug pull you down the line once you’re locked into their ecosystem – which is a big worry for me with pretty much all the other alternatives.
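The 960 GB figure falls straight out of the parameter count: 480 billion parameters at two bytes (16 bits) each. Here is a rough sketch of that sum. The function name is made up, the lower bit widths are purely illustrative quantisation levels, and the result covers the weights only, which is why the RAM estimate above is a good deal larger once context is added.

```python
def model_weights_gb(params_billion: float, bits_per_param: float) -> float:
    """Approximate size of the weights alone in GB (10^9 bytes).

    Ignores context/KV cache and runtime overhead, which need RAM on top.
    """
    return params_billion * bits_per_param / 8


if __name__ == "__main__":
    # 16 bits per parameter matches the 480b = 960 GB figure in the text;
    # 8 and 4 bits are hypothetical quantisation levels for comparison.
    for bits in (16, 8, 4):
        print(f"480b parameters at {bits} bits each: {model_weights_gb(480, bits):.0f} GB")
```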

I’ve only used Claude Sonnet for coding before I used Qwen3 Coder, and that was what Claude was over a year ago. Claude back then was okay, but I wasn’t sure at the time if it was worth the time spent coaxing it. Qwen3 Coder, which was only released six months ago, is much better and at times it is genuinely useful, mainly to save me having to look something up to get a syntax or config file contents right. It is less good at diagnosing bugs apart from segfaults as it’ll rinse and repeat fixes on its own until it finds one where the segfault disappears, and depending on the parseability of the relevant source code it can write some pretty decent tests for that portion of code. Obviously it’s useless for niche problems it wasn’t trained upon, or bugs with no obvious solution to any human, or choosing the right strategic direction for a codebase (which is something many otherwise very skilled devs are also lousy at), so I don’t think agentic AI will be taking as many tech dev roles as some people think. But I do think the next tech bubble reinflation shall pretty much mandate the use of these tools, as without them you’ll be market uncompetitive – at least within the high end contracting world.

Having spent a lot of time with text producing AI and only a little with video and music producing AI, I have been shoring up my skills with those too. Retail consumer hardware has been able to run image generating AI e.g. Stable Diffusion for some time, but until very recently image manipulation AI required enterprise level hardware if it was going to be any good. However six months ago Alibaba released Qwen3 Image Edit which dramatically improved the abilities of what could be done on say an 18Gb RAM Macbook Pro like my own. This is a 20 billion parameter model, and with a 6 bit quantisation it runs slowly but gets there on my Mac after about twenty minutes per image edited. Firstly, one feeds it an input image, I chose the Unreal Engine 5 screenshot from three years ago:

The original from Unreal Engine 5 (but scaled down to 1k from 4k resolution)

I then asked for various renditions, of which these were the best three:

What Qwen3 image edit AI rendered as a charcoal and pencil drawing

What Qwen3 image edit AI rendered as a watercolour

What Qwen3 image edit AI rendered as a finely hatched pencil drawing

This is simple stuff for Qwen3 image edit. It can do a lot more like infer rotation, removal of obstacles in the view (including clothes!), insertion of items, posing of characters, replacing faces or clothing, and it can add and remove text, banners, signs or indeed anything else which you might use in a marketing campaign. All that has much potential for misuse of course – if you want to edit a politician into an embarrassing scene, or a celebrity into your porn scenario of choice, there is absolutely nothing stopping you bar some easy to bypass default filters in Qwen3 image edit.

I thought I’d have it edit the original picture into one of a scene of devastation like after a nearby nuclear strike to see how good it was at being creative. This model is hard on my Macbook, each twenty minute run consumes half the battery as all the GPUs and CPUs burn away at full belt, so this isn’t a wise thing to run when you’re putting the kids to bed. Still, here’s what it came up with for this prompt:

Transform this image into a scene of devastation, with the houses mostly destroyed and partially on fire, the sky dark with smoke and burned out cars and scattered children’s toys on the grass and road.

It looks rather AI generated, but that was genuinely its very first attempt and I didn’t bother refining it to reduce the unrealistically excessive number of burned out cars, the small children it decided to add on its own, or the unphotorealistic colour palette it chose. I don’t doubt that I could have iterated all that away with some time and effort, but ultimately all I was really determining was what it could be capable of, and that’s not half bad for a first attempt for an AI which can run locally on my laptop.

Finally, up until now the best general purpose LLM I’ve found that works well on my 18Gb RAM Macbook has been the 8b llama 3.1. It uses little enough memory that 32k token context windows don’t exhaust memory and reduce performance to a crawl. However, a potential new contender has appeared: a per layer quantised 30b Qwen3 instruct model, quantised that way to get it to fit inside 10 Gb of RAM. This model went viral over the tech news last month because it’ll run okay on a 16 Gb RAM Raspberry Pi 5, albeit with a max 2k context window which isn’t as useful as it could be. Thankfully, my Macbook can do rather more, and after some trial and error I got it up to a 20k token context window which is definitely the limit of my RAM (the 18 Gb Macbook Pro has a max 12 Gb of RAM for the GPU).

I should explain quantisation for the uninitiated: models are generally made using sixteen bit floating point weights, and reducing those to eight bits for use halves the RAM consumption and doubles the performance for only a little loss in quality and capability. Below eight bits things get a bit dicier: the four bit quantisation is a common one for retail consumer hardware, there is some loss in the model but it’s usually acceptable. The Mixture of Experts sparse models like those of the Qwen series offer a further option: they work by an initial model choosing which sub-models to use, so you can quantise each of those models individually to pack the overall model more tightly. So your 30b model, which would normally consume 18 Gb of RAM or so at four bit quantisation, can be packed into less if some of the sub-models are quantised down to two bits, but others at four bits, and so on. The aforementioned viral model came in a range of quantisations, and I chose the 2.75 bit average quantised model. This fits into 10 Gb of RAM, and this is how it is possible to run a 30b model on an 18 Gb RAM total computer.
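To make the arithmetic behind those figures concrete, here is a minimal sketch which estimates the size of the weights alone from the parameter count and the average bits per weight. The function name `weights_size_gb` is made up for illustration, and the sketch deliberately ignores the context window (KV cache) and runtime overhead, which come on top and presumably account for real world figures being somewhat higher than the weights-only numbers.

```python
def weights_size_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in decimal gigabytes.

    Assumption: every parameter is stored at the given average bit width.
    Context window memory and runtime overhead are NOT included.
    """
    total_bits = params_billion * 1e9 * bits_per_weight
    return total_bits / 8 / 1e9  # bits -> bytes -> gigabytes


if __name__ == "__main__":
    for label, params, bits in [
        ("480b at 16 bit", 480, 16),
        ("30b at 16 bit", 30, 16),
        ("30b at 8 bit", 30, 8),
        ("30b at 4 bit", 30, 4),
        ("30b at 2.75 bit average", 30, 2.75),
    ]:
        print(f"{label}: ~{weights_size_gb(params, bits):.1f} GB of weights")
```

The 480b model at sixteen bits comes out at the 960 quoted earlier, and the 30b model at a 2.75 bit average comes out at a little over 10, which is consistent with it just about fitting alongside a context window in the 12 Gb available to the GPU.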

I fed both the Qwen3 30b and Llama3.1 8b models the same raw transcribed will of my ancestor Walter Irvine as I fed cloud full fat Claude Sonnet 4.5 a few posts ago, and here are their summaries of that document side by side:

| Cloud full fat Claude Sonnet 4.5 400b? 8 bit? | Local Qwen3 30b 2.75 bit | Local Llama3.1 8b 4 bit |
|---|---|---|
| (nearly instant) | 16k context: 12.54 toks/sec generation; 20k context: 6.65 toks/sec (it was swapping to disc) | 16k context: 20.24 toks/sec generation; 32k context: 19.47 toks/sec generation |

Summary from cloud full fat Claude Sonnet 4.5:

This is a comprehensive probate document from Edinburgh dated November 17, 1825, concerning the estate of Walter Irvine, Esquire, who died on January 7, 1824, at Luddington House, Egham, Surrey. He held properties in the Island of Tobago (West Indies), Luddington House in Surrey, and Denino in Fife, Scotland.

Scottish Estate Inventory (Total: £5,258 6s 2d)

I. Rents and Arrears Due (£4,569 13s 2½d):

II. Bond from John M. Harlaw of Kirkton: £245 5s 1d plus interest to January 1824 (Total: £262 15s 1½d)

III. Bond by Road Trustees (Anstruther-Crail to St. Andrews): £150 principal plus interest (Total: £162 7s 4½d)

IV. Bond by Lundenfoot Road Trustees: £50 with compounded interest from 1821-1824 (Total: £55 1s 2d)

V. Proceeds from sale of wood: £33 10s

VI. Balance due from Walter Dickson WS (Writer to the Signet): £174 16s 11d plus interest (Total: £174 18s 11d)

Will Provisions (dated October 18, 1823)

Primary Beneficiaries:

Wife - Catherine Irvine (née Gordon):

Three Legitimate Daughters (each receiving £35,000):

Natural Daughter - Betsey (of London):

Other Significant Bequests:

Ann Stewart (sister-in-law and widow of Stewart):

Brother's Estate:

Nephews:

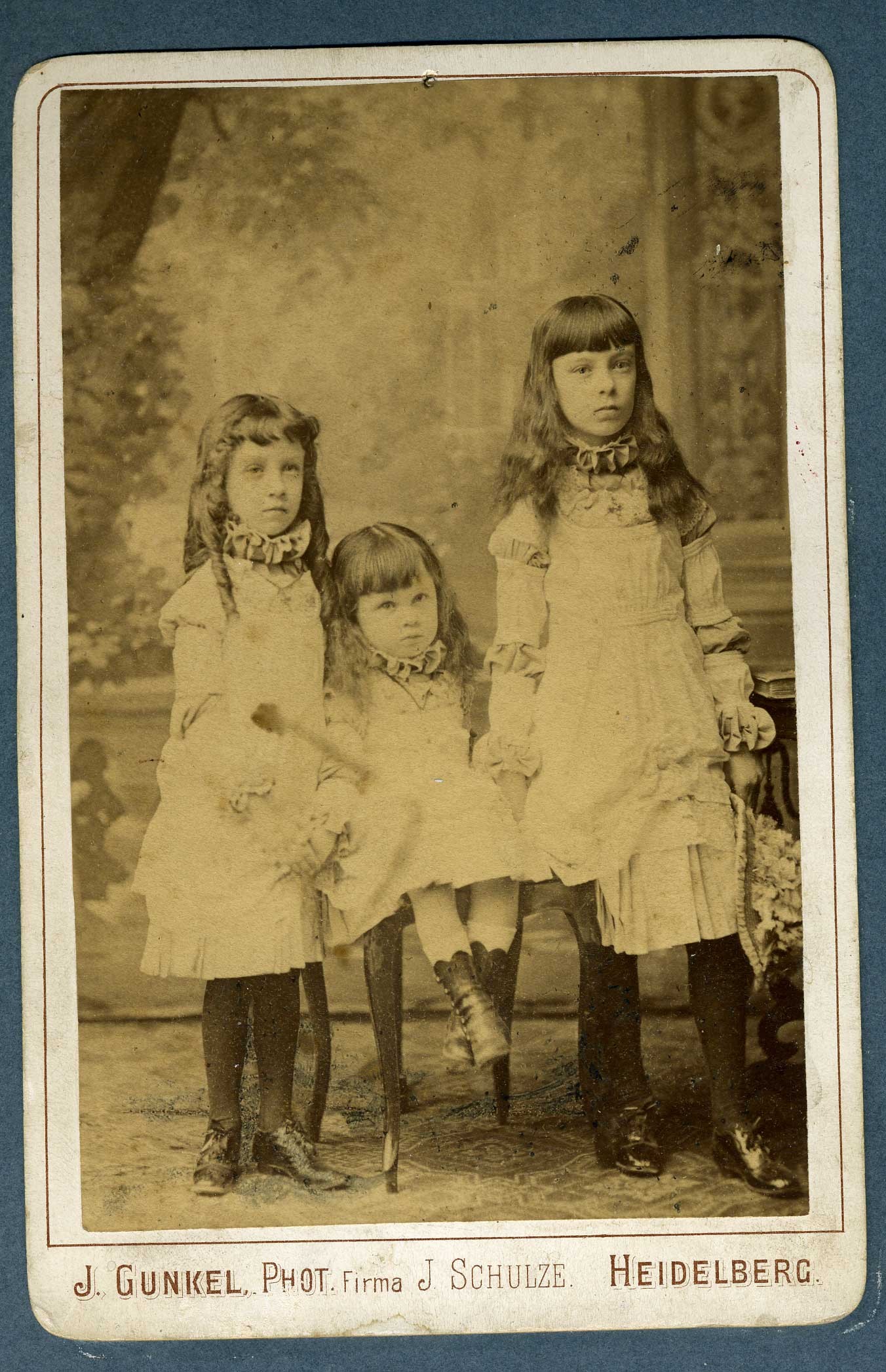

Nieces (Clara and Marian Irvine - daughters of Christopher William):

Jane Armstrong (formerly Jane Currie, wife of Francis Armstrong):

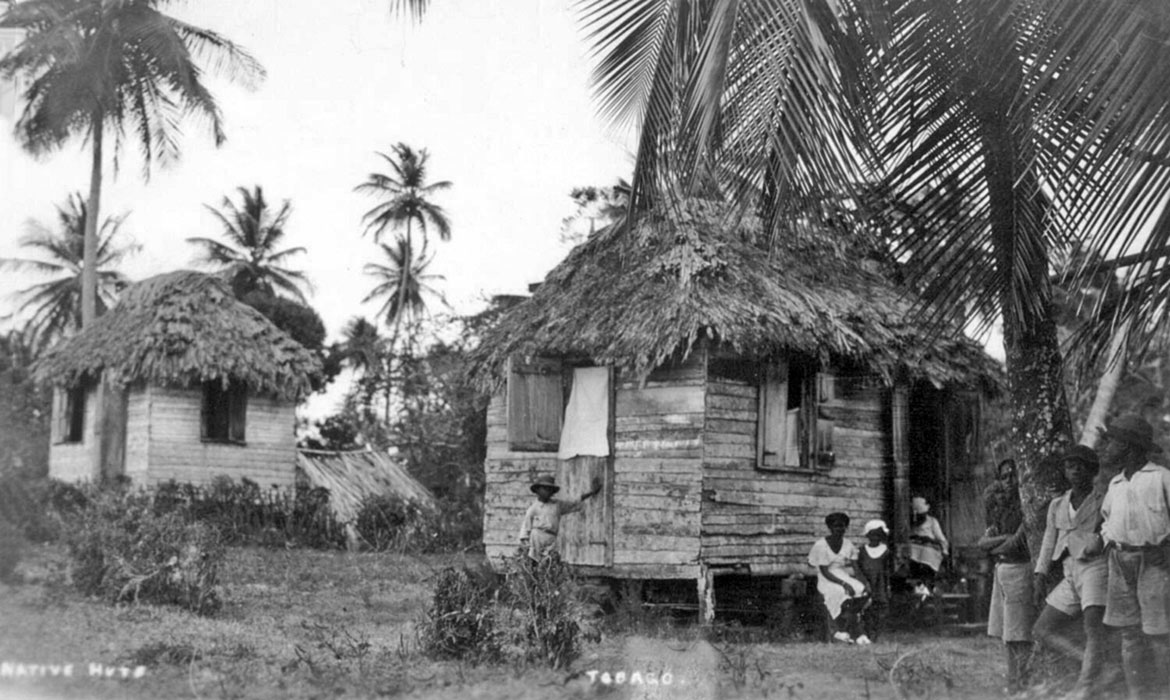

Mary (reputed daughter of a negro woman named Linda in Tobago):

Other Named Beneficiaries:

Continuing Annuities:

Trust Structure and Powers:

Executors and Trustees:

Powers Granted to Trustees:

Specific Provisions for Children's Trusts:

West Indies Property:

John Leith Dispute:

Plantation Management:

Scottish Property Requirements:

Election Doctrine:

Residuary Estate:

After all debts, legacies, and annuities paid, the residue divided equally among three legitimate daughters as tenants in common:

Elizabeth's share for her separate use with power to dispose by deed or will despite coverture.

Probate Details:

Proved: January 28, 1824, in Prerogative Court of Canterbury

Initial Grant of Administration to:

Reserved Power for: John Hamilton, Christopher Irvine, and Thomas Bruce to apply later

Witnesses to Will:

Codicil: Dated same day (October 18, 1823), adding £100 bequest to Susannah Ann Hamilton

Scottish Confirmation: Presented November 17, 1825, before George Carshin Jr., Commissioner of Edinburgh, with deposition by W.R.K. Douglas confirming the inventory and will details.

The document demonstrates extensive wealth with significant properties in Scotland, England, and the West Indies, careful provision for family members including an illegitimate daughter, and sophisticated trust arrangements typical of wealthy estates in the early 19th century.

Summary from local Qwen3 30b 2.75 bit:

The document presents a detailed legal inventory and probate record of the personal estate of Walter Irvine, an Esquire who died on January 7, 1824, at Luddington House in Surrey. The inventory was compiled by William Robert Keith Douglas (a Lord of the Admiralty and Executor), following Walter Irvine’s will dated October 18, 1823, which was proved in the Prerogative Court of Canterbury in 1824. Key Details:

Major Assets & Liabilities (Personal Estate):

I. Rents Due to Deceased:

II. Debt to John Harlan:

III. Bond on Road from Auchter and Crail to St. Andrews:

IV. Lundenfoot Road Trusts:

V. Wood Rents Due:

VI. Balance due to Walter Irvine from Walter Dickson (Account Current):

Summary of Estate Value:

Testamentary Will – Key Provisions:

Walter Irvine’s will outlines complex trusts and legacies:

1. Annually to Wife Catherine Irvine:

2. Legacies to Children & Relatives:

3. Trusts & Management of Legacies:

4. Additional Bequests:

5. Power of Trustee:

Legal Verification & Probate:

Final Notes:

This document confirms Walter Irvine’s death, the validity of his will, and provides a full inventory of his personal estate in Scotland (£5,258.6.2) and English holdings. The complex trust system ensures lasting financial protection for heirs, particularly daughters, with provisions for education, marriage, and inheritance.

Summary from local Llama3.1 8b 4 bit:

The text appears to be a combination of two main documents: the last will and testament of Walter Irvine, dated October 18, 1823, and related probate documentation.

Last Will and Testament

The will is written in a formal style, detailing Walter Irvine's wishes for the distribution of his estate after his death. He appoints five executors to manage his estate: Catherine Irvine (his wife), William Robert Keith Douglas, Alexander Gordon, John Hamilton, and Thomas Bruce. Walter bequeaths various legacies to friends, family members, and charities. These include:

He also establishes a trust for his daughters Elizabeth Douglas, Christina Charles, Catherine Grace, and Betsy. Each daughter will receive one-third of the estate upon their marriage or death. The surviving husband will have a life interest in the legacy after his wife's death. Walter also directs that his real and personal property be held in trust for his three daughters (or any of them who survive him) to be divided equally between them as tenants in common with benefit of survivorship. He also provides for the education, maintenance, and advancement of each daughter's children.

Probate Documentation

The will was proved on January 28, 1824, in the Prerogative Court of Canterbury, where Catherine Irvine and William Robert Keith Douglas were sworn as executors. On November 12, 1825, Lord Wm K K Douglas (presumably a judge) granted probate to the executors. This grant refers back to Walter's deposition on the inventory of his personal estate on October 25, 1825. The probate documentation includes various affidavits from witnesses who attest that Walter Irvine was of sound mind and disposing memory when he made his will. The will is described as being written in eighteen sheets of paper with a seal attached to the final sheet.

Inventory of Personal Estate

An inventory of Walter's personal estate was prepared by Mr. Walter Dickson, Winter (a Signet). It lists various debts owed to the deceased, including:

The total value of Walter's estate in Scotland is estimated to be £5,258.6.2.

Overall, the text provides a detailed picture of Walter Irvine's last will and testament, as well as related probate documentation and an inventory of his personal estate.


Unsurprisingly, the 400b? full fat Claude Sonnet 4.5 model does by far the best summary. The Qwen3 30b model is obviously a lot more detailed than the Llama3.1 8b model, but it also misbalances what detail to report upon and what to leave out e.g. it gets what was left to Betsey completely wrong. Llama3.1 8b produces a short summary consisting of only what it thinks are the bare essentials, and I would personally say it’s a fairly balanced summary choosing a fair set of things to report in detail and what to omit.

After a fair bit of testing, I think I’ll be sticking to Llama3.1 8b for my local LLM use. It has reasonable output, it follows your prompt instructions more exactly, and it’s a lot faster than the Qwen3 model on my limited RAM hardware. But Qwen3 did better in my testing than anything I’ve tested since Llama3.1 came out – I was not impressed by Gemini 12b, for example, which I found obviously worse for the tasks I was giving it. Qwen3 isn’t obviously worse, it looked better initially, but only after a fair bit of pounding did its lack of balance relative to Llama3.1 become apparent.

All that said, AI technology is clearly marching forwards: give it a year and I would not be surprised to see Llama3.1 (which is getting quite old now) superseded.

Returning to ‘what’s coming next?’, I shall be taking my children to England to visit their godparents in April which will give Megan uninterrupted free time to study. I expect that will be my only foreign trip this year. During February and March I mainly expect to clear all open bugs remaining in my open source libraries, practise more with AI tooling, and keep clearing items off the long term todo list. I am very sure that I shall be busy!

Word count: 2645. Estimated reading time: 13 minutes.

- Summary:

- The author has been unemployed for seven months and has made good progress on their todo list, which they had built up over eight years. They have visited people, gone to places, and cleared four A4 pages worth of chore and backlog items. Their productivity during unemployment has increased, possibly due to the lack of work-related stress. The author is now nearing completion of their todo list and plans to update their CV soon.

Friday 16 January 2026: 22:05.


I’m now into the final A4 page of todo items! To be honest, I’ve not been trying too hard to find new employment, I haven’t been actively scanning the job listings and applying for roles. That will probably change soon – as part of clearing my multi-page todo list I finally got the ‘kitchen sink’ CV brought up to date which took a surprising number of hours of time as I hadn’t updated it since 2020. And, as the kitchen sink CV, it needed absolutely everything I’d done in the past five years, which it turns out was not nothing even though my tech related output is definitely not what it was since I’ve had children. So it did take a while to write it all up.

Still, I have a few more months of todo list item clearance to go yet! The next todo item for this virtual diary is the insulated foundations design for my house build, which my structural engineer completed at the end of last November. I’ll also be getting into my 3D printed model houses as I’ve completed getting them wired up with lights.

To recount the overall timeline to date:

- 2020: We start looking for sites on which to build.

- Aug 2021: We placed an offer on a site.

- Jul 2022: Planning permission obtained (and final house design known).

- Feb 2023: Chose a builder.

- Feb 2024: Lost the previous builder, had to go get a new builder and thus went back to the end of the queue.

- Aug 2024: First draft of structural engineering design based on the first draft of timber frame design.

- Nov 2024: Completed structural engineering design, began joist design.

- Mar 2025: Completed joist design, began first stage of builder’s design sign off.

- Jun 2025: First stage of builder’s design signed off. Structural engineering detail begins.

- Nov 2025: Structural engineering detail is completed, and signed off by me, architect, and builder.

- Dec 2025: Builder supplies updated quote for substructure based on final structural engineering detail (it was approx +€20k over their quote from Feb 2024).

- We await the builder supplying an updated quote for superstructure … I ping him weekly.

We are therefore on course to be two years since we hired this builder, and we have yet to get them to build anything. As frustrating as that is, in fairness they haven’t dropped us yet like the previous builder, and I’m sure all this has been as frustrating and tedious for them as it has been for us. As we got planning permission in July 2022, we are running out of time to get this house up – the planning permission will expire in July 2027 by which time we need to be moved in.

All that said, I really, really, really hope that 2026 sees something actually getting constructed on my site. I was originally told Autumn 2024, we’re now actually potentially Autumn 2026. This needs to end at some point with some construction of a house occurring.

The insulated foundations detail

I last covered the engineer’s design in the June 2025 post, comparing it to the builder’s design and the architect’s design. Though, if you look at the image below it’s just a more detailed version of the image in the August 2024 post. Still, with hindsight, those were designs, but not actual detail. Here is what actual detail looks like:

We have lots of colour coded sections showing type and thickness of:

- Ring beams (these support the outer concrete block leaf and outer walls)

- Thickenings (these support internal wall and point loads)

- Footings (these support the above two)

As you can see, the worst point load lands in the ensuite bathroom to the master bedroom – two steel poles bear down on 350 mm extra thick concrete slab with three layers of steel mesh within, all on top of an over sized pad to distribute that point load. This is because the suspended rainwater harvesting tanks have one corner landing there, plus one end of the gable which makes up the master bedroom. The next worst point loads are under the four bases of the steel portal frames, though one is obviously less loaded than the other three (which makes sense, only a single storey flat roof is on that side of that portal frame). And finally, there is a foot of concrete footing – effectively a strip foundation – all around the walls of the right side of the building, this is again to support the suspended rainwater harvesting tanks.

Let’s look at a typical outer wall detail:

Unsurprisingly, this is identical to the standard KORE agrément requirements diagram I showed in the most recent post about the Outhouse design (and which I still think is overkill for a timber frame house load, but my structural engineer has no choice if we are to use the KORE insulated foundations system). Let’s look at something which we haven’t seen before:

This is for one of the legs of the portal frame next to the greenhouse foundation, which has 200 mm instead of 300 mm of insulation. Much of the weight of the house bears down on those four portal frame legs, so unsurprisingly at the very bottom there is firstly a strip footing, then a block of CompacFoam which can take approx 20x more compressive load than EPS300, then 250 mm of double mesh reinforced concrete slab – you might see a worst case 3000 kPa of point load here, which is of course approximately 300 metric tonnes per sqm. I generally found a safety factor of 3x to 10x depending on where in their design, so I’m guessing that they’re thinking around 100 metric tonnes might sometimes land on each portal frame foot.

Actually, I don’t have to guess, as they supplied a full set of their workings and calculations. I can tell you they calculated ~73 kg/sqm for the timber frame external wall and internal floor, and ~42 kg/sqm for internal racking walls. The roof they calculated as ~140 kg/sqm, as I had said I was going to use concrete tiles. Given these building fabric areas:

- 150 sqm of internal floor = 11 tonnes

- 393 sqm of external wall = 29 tonnes

- 270 sqm of roof = 48 tonnes

… then you get 88 tonnes excluding the internal racking walls, most of which bear onto the concrete slab rather than onto the portal frames. So let’s say a total of 100 tonnes of superstructure. As you can see, even if the entire house were bearing on a single portal frame leg instead of across all four legs, you’d still have a 3x safety margin. With all four portal frame legs, the safety margin is actually 12x.
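The arithmetic above can be checked with a short sketch. It takes the listed per-element tonnages as given rather than recomputing them, converts the 3000 kPa worst case into tonnes per sqm, and assumes roughly 1 sqm of bearing area per portal frame leg. That bearing area is an assumption made purely for illustration (it is what the 3x and 12x figures imply, not a number taken from the engineer’s workings), and the names used are hypothetical.

```python
G = 9.81  # standard gravity in m/s^2


def kpa_to_tonnes_per_sqm(kpa: float) -> float:
    """Convert a pressure in kPa to the equivalent mass in metric tonnes per sqm."""
    newtons_per_sqm = kpa * 1000.0
    kg_per_sqm = newtons_per_sqm / G
    return kg_per_sqm / 1000.0


# Tonnages exactly as listed in the text (not recomputed here).
listed_loads_tonnes = {"internal floor": 11, "external wall": 29, "roof": 48}
listed_total = sum(listed_loads_tonnes.values())

# The text rounds up to allow for the internal racking walls.
ROUNDED_SUPERSTRUCTURE_TONNES = 100

# ASSUMPTION for illustration only: about 1 sqm of bearing area per leg.
BEARING_AREA_SQM = 1.0

capacity_per_sqm = kpa_to_tonnes_per_sqm(3000)


def safety_margin(load_tonnes: float, legs: int) -> float:
    """Ratio of worst case bearing capacity across `legs` legs to the load."""
    return capacity_per_sqm * BEARING_AREA_SQM * legs / load_tonnes


if __name__ == "__main__":
    print(f"Listed total: {listed_total} tonnes")
    print(f"3000 kPa is about {capacity_per_sqm:.0f} tonnes per sqm")
    print(f"One leg:   {safety_margin(ROUNDED_SUPERSTRUCTURE_TONNES, 1):.1f}x")
    print(f"Four legs: {safety_margin(ROUNDED_SUPERSTRUCTURE_TONNES, 4):.1f}x")
```

Under those assumptions the margins come out at a little over 3x for a single leg and a little over 12x across all four.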

Speaking of heavy weights, we have a hot 3000 litre thermal store tank to support where the foundation must deal with ~80 C continuous heat. EPS doesn’t like being at 80 C continuously, so if we had hot concrete directly touching the EPS, we were going to have longevity problems. This did cause some thinking caps to be put on by engineer, architect and myself and we came up with this:

As you see, we introduce a thermal break between the hot concrete slab and the EPS using 50 mm of Bosig Phonotherm, which is made out of polyurethane hard foam. Polyurethane is happy with continuous temperatures of 90 C, and it drops the maximum temperature that the EPS sees to around 70 C. It is also happy getting wet, it can take plenty of compressive loads, and its only real negative is that it is quite expensive. Unfortunately, I’m going to have to use a whole bunch of Bosig Phonotherm all around the window reveals, but apart from the expense it is great stuff to work with and it performs excellently. To the sides, the thermal break is the same 30 mm Kingspan Sauna Satu board which is the first layer of insulation within the thermal store box: Satu board is a specially heat resistant PIR board, and as it is expensive we use conventional PIR board for most of the thermal store insulation. Similar to the Bosig Phonotherm, the Satu board brings the temperature down for the outer PIR, also to about 70 C.

Finally, let’s look at some detail which also delayed the completion of this insulated foundation design: the insulated pool cavity:

The challenge here was to maintain 200 mm of unbroken EPS all the way round whilst also handling the kind of weight that 1.4 metres of water and steel tank and the surrounding soil and pressure from the house foundations will load onto your slab. Solving this well certainly consumed at least six weeks of time, but I think it was a job well done. Given that my engineer did not charge me more than his original quote – and he certainly did more work than he anticipated at the beginning – I cannot complain. The quality of work is excellent, and I’m glad that this part of the house design has shipped finally.

3D printed model house

Eighteen months ago I taught myself enough 3D printing design skills that I was able to print my future house using my budget 3D printer. It came out well enough that I ordered online a much bigger print in ivory coloured ASA plastic, which I wrote about here back in June 2024. In that post, I demonstrated remarkable self knowledge by saying:

When all the bits arrive, if a weekend of time appears, I’ll get it all cut out, slurry paste applied where it is needed, wired up and mounted and then put away into storage. Or, I might kick it into the long touch as well and not get back to it for two years. Depends on what the next eighteen months will be like.

Writing now eighteen months later … indeed. After the model arrived, I then ordered a case for it which I wrote about here in August 2024, where I said:

The extra height of the case will be used by standing each layer of the house on stilts, with little LED panels inside lighting the house. You’ll thus be able to see all around the inside of the model house whilst standing instead the actual finished house. It may well be a decade before I get to assemble all the parts to create the final display case, or if this build takes even longer I might just get it done before the build starts. We’ll see.

I was being overly pessimistic I think by this point, though had the house build started sometime between then and now maybe I’d be too occupied working on the house to work on a model house. Anyway, all that’s moot, because witness the awesomeness of the fully operational house model:

From all sides:

And with the case on:

To make sure there would be no thermal runaway problems which might melt the house etc:

Looks like on full power after twenty minutes with the case on we get a maximum 54 C or so. Now, it being winter here, the surroundings are cold so that could easily be 10-15 C more in summer. But it wouldn’t matter – ASA plastic has a glass transition temperature of 100 C. And, besides, we’ll never run the lights at full power, they’re too bright so they will be at most on a 33% PWM dimming cycle.

The lighting is provided by these COB LED strips with integrated aluminium heatsink made by a Chinese vendor called Sumbulbs. You can find them in all the usual places and you can buy a dozen of them for a few euro delivered. They are intended to be soldered, and you can see my terrible soldering skills at work:

The layers of the house are separated by cake stands:

And with the case on:

And finally, showing both the cake stands and the COB LED light strips:

You’ll note that not all the COB LED strips have the same brightness. With hindsight, this is very obvious – that darker one to the bottom right clearly has only a few LEDs spaced well apart, whereas the top roof ones especially are densely packed LEDs. I mainly chose the strips at the time based on what would fit the space available rather than any other consideration. Anyway, I don’t think it matters hugely, but perhaps something to be considered by anybody reading this.

In case you’re wondering, total costs were:

- €150 inc VAT inc delivery for the 3D print.

- €200 inc VAT inc delivery for the cases (this includes the small case below).

- €20 inc VAT inc delivery for the cake stands, most of which I did not use.

- €10 inc VAT inc delivery for the COB LED strips, and I also only ended up using a few of these and I have loads spare.

So I’d make that about €350 inc VAT inc delivery, plus a whole load of my time to design the 3D print and do all the wiring. I intend to mount it into the ‘house mini museum’ which will memorialise how the house was designed and built.

3D printed model site

You may or may not remember that using my own personal 3D printer I had also printed the whole site. This isn’t very big as it’s limited by the 20 cm x 20 cm maximum plate on my personal 3D printer, but it gives you a good sense of how the house and outhouse frame the site. Anyway, I started by inserting 4 mm LEDs throughout the house and outhouse:

I also designed a little stand for ‘the sun’ at the south side of the site, which is another Sumbulbs COB LED strip with integrated aluminium heatsink (the same used throughout the big house model):

And turning up ‘the sun’ to full power:

With the roofs off:

And finally the thermals:

Once again, no issue there even on full power with the case on after twenty minutes.

This model certainly cost under €50 inc VAT inc delivery, maybe €40 is a reasonable estimate. Most of the cost was the case, and of course my time.

My plan for this model is that it will be in daylight when it is daylight outside, and go to night time when it is night time outside, and it’ll also be mounted in the mini house museum. Should be very straightforward. Just need to get the real house built.

I continue to ping the builder weekly, and maybe at some point he might pony up a final quote and then it’ll be off to the races after two years with him, and three years waiting for builders in general. Here’s hoping!

Word count: 25032. Estimated reading time: 118 minutes.

- Summary:

- A detailed historical narrative spanning 1815-1915 is presented, tracing how one branch of the author’s family lost their fortune derived from Caribbean sugar plantations. The decline of landed aristocracy through economic shifts, policy changes, and poor investment decisions is examined. Parallels are drawn between that era and current times, with speculation offered about AI’s role in creating a new technological aristocracy and its societal implications.

Friday 9 January 2026: 00:26.


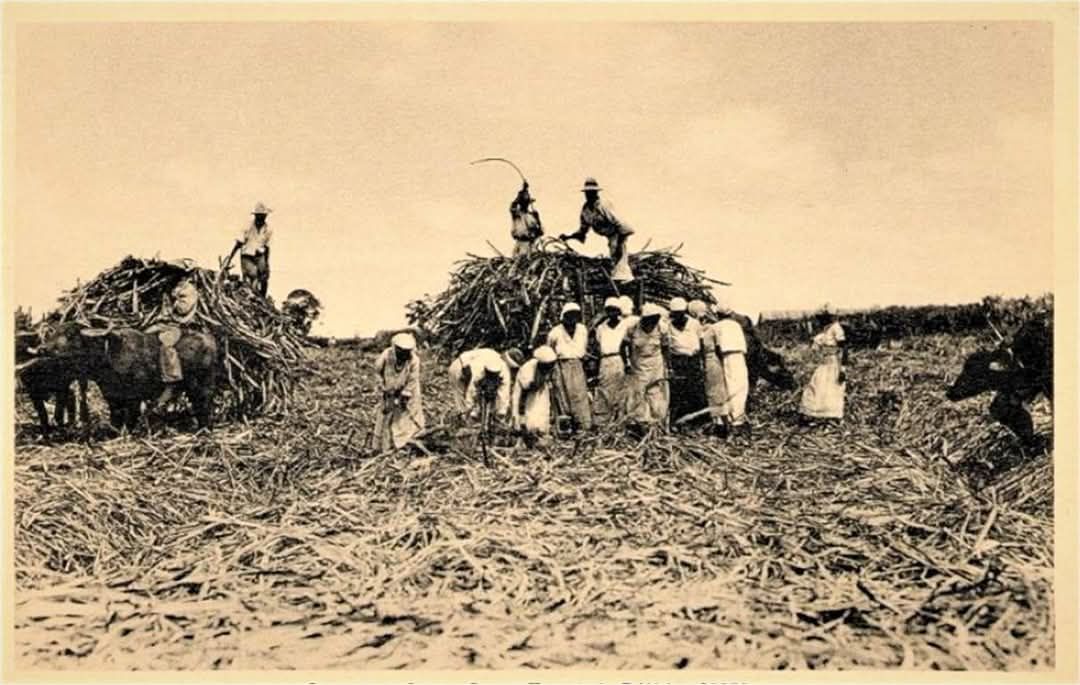

This essay is about how my personal family, especially one branch of it, got from where they were at the beginning of the Second British Empire, which began after the defeat of Napoleon in 1815, until now. The period 1815-1915 is peculiarly similar to the period 1945-2045 (so far) with a remarkable repetition of resonances, so what keeps drawing me back to that earlier period is that I am thinking hard about what is to come next for us right now. Yes, we know the broad sweep of history from the history books. But in terms of knowing what to do for my family now and next, personalising history into the context of my historical family from the 1815-1915 period seems worth doing.

Also, to be honest, AI has just very recently revolutionised parsing historical records. I had always assumed that record keeping before the 20th century just wasn’t very good. In fact, record keeping since 1750-1800 onwards has been surprisingly complete in most of the western world, it’s just that the data was locked in hard to parse records distributed across many small places. AI in the last few years has become better than certainly this human’s ability to decipher ancient hand writing, which has turned all the digitised old records into far more useful resources because now the text extracted from them is accurate, but also because AI can then summarise that extracted text into comparable text corpora. Also, there has been a concerted effort to digitise things like headstones in cemeteries, and with all that information sites like ancestry.com have made it surprisingly straightforward to assemble your entire complete family tree going back to 1750 or so. To that end, here are the sixty-four sixth generation away people who contributed genes to my children (and my thanks to my Aunt Ruth and Megan’s mother Sara for getting me started with creating this tree), and my apologies in advance if your web browser doesn’t yet support the experimental scroll-initial-target:nearest CSS feature to initially scroll this very wide PNG to centre on page load (if it doesn’t, you’ll need to scroll this image right to reach the centre):

There are five people missing – this is because ancestry.com wants me to pay them money to establish those, and because I don’t want to pay them money, I’ll be leaving those empty until the ‘free ancestry.com lottery’ gives me them for free (to drive engagement, they drip feed you free data each day to make you log in frequently). But I can tell from their advertising being pushed at me that they have the data not just for those missing five people, but also certainly their parents and possibly their grandparents. So, given enough time or paying ancestry.com money, I think I could create a reasonably probably complete list of the 256 people which make up the eighth generation away ancestors. That’s pretty good – that’s everybody from about 1750 until now, so 275 to 300 years, barring a few likely mistakes where I’ve misconnected children to parents. Within that list, I know birth, death and marriage dates, the number of children and number of spouses, but I often also know occupations, and a surprising amount about internal and international travel (before the 20th century, immigration logs were public, but also children’s birth certificates state location).
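As a quick sanity check on those numbers, here is a minimal sketch of the arithmetic, assuming every ancestor slot is filled by a distinct person (in practice cousins marrying each other means the true count of distinct people can be lower). The function names are my own invention for illustration:

```python
def ancestors_at(generation: int) -> int:
    """Ancestor slots exactly `generation` steps above one person.

    Each person has two parents, so the count doubles per generation.
    Assumes no pedigree collapse (no person appears in two slots).
    """
    if generation < 0:
        raise ValueError("generation must be zero or positive")
    return 2 ** generation


def ancestors_up_to(generation: int) -> int:
    """Total ancestor slots from parents (1) up to `generation` inclusive."""
    return sum(ancestors_at(g) for g in range(1, generation + 1))


if __name__ == "__main__":
    print(ancestors_at(6))     # slots in the sixth generation away
    print(ancestors_at(8))     # slots in the eighth generation away
    print(ancestors_up_to(8))  # every slot from parents to the eighth generation
```

So the sixth generation away has 64 slots, the eighth has 256, and a tree complete up to the eighth generation would have 510 slots in total.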

The picture which emerges from our complete set of ancestors is very much representative of European colonial imperialist expansion during that period. As that will also set the stage prior to when this essay will begin in earnest from 1815 onwards, it is worth briefly recounting.

What our ancestors were doing towards the end of the First French Empire (before 1815)

What AI thinks British Colonial America looked like before independence

Megan’s side of the family before 1815

In terms of percentage of new arrivals relative to the existing population, the United States has had three historical bursts of immigration:

- 1600-1770 (pre-independence), after which immigration was near zero until …

- 1830-1900 much of which was driven by famine in Europe followed by the Long Depression, after which …

- 1950-2010 was driven by the United States replacing Britain as the dominant world economic hegemon, and therefore attractive to global economic migrants.

You can read lots more detail at the Wikipedia page on the history of immigration into the United States, and no I didn’t mistype the third burst ending in 2010 – most US citizens currently think floods of immigrants are entering right now, but the statistics show that the third burst did indeed peak in 2010 and since then ever fewer people relative to the current population have relocated to the United States. If history repeats, it’ll be another fifty years before the next burst begins.

Interestingly, most of my wife’s ancestors were already in the US when it was still a British colony, so they arrived during the pre-1770 immigration period from what appears to be mostly British Presbyterian stock (who at the time were being persecuted for their religious beliefs). That was surprising to me and to her – she had thought herself comprised more of post-1830 immigrants. Yes, there are a few of those in her bloodlines, but the bulk is undoubtedly the many young British people who sold themselves into indentured labour to pay for transport to the colonies with the hope of better economic opportunities. After their indentured contract was served, they generally purchased farmland, typically around Maryland, Carolina and Virginia where cash crops for export were grown. One thing very noticeable on Megan’s side of the tree is the number of children her ancestors had who survived into adulthood compared to my side of the tree – also, whereas a majority of the women on my side of the tree died in childbirth, on her side I’d estimate two thirds or more made it into relatively old age. This suggests that while life was hard in the United States, it was worse in Europe, at least for our ancestors. Another thing which stands out is that on my side of the family, there was always the ‘youngest son’ or ‘junior branch’ problem because the eldest sons would get all the inheritance, so special effort and arrangements had to be made to find livelihoods and wives for the youngest sons. In contrast, on Megan’s side of the family all the children bought farmland no matter how senior or junior, apparently with relative ease – I would assume that unlike in Europe where all the land was taken and very little was available for purchase, in the US at that time you simply took new land from the native inhabitants which made it affordable to all children.

Indeed, the state of Indiana is so named because it was originally part of the Indian Reserve of 1763 (about which you can read lots more here). That area underwent a period of violent instability caused by British and American armies fighting each other and the native tribes up until 1812 when the British yielded, and the US Congress sought to ensure a bulwark against any further instability going forwards. They made available lots of farmland on the now disentailed Indian Reserve at low prices, and a majority of Megan’s ancestors appear to have taken up the offer – there is a noticeable movement all at once from all over then British Colonial America of all the generations whose children and grandchildren were yet to marry each other to lands within or nearby the state of Indiana. And there they have remained ever since, with surprisingly limited relocation until very recently, mainly growing food or providing services to farmers such as religious guidance (mainly Lutheran), blacksmithing or dentistry for about two hundred years. As far as I can tell from this vantage point, there wasn’t a huge disparity in economic outcomes across all Megan’s ancestors – they all appear to have started from roughly similar circumstances, some were a generation or two earlier to wealth and education and moving up the value chain than others, but they all moved with remarkable uniformity relative to my ancestors who are far more a hotchpotch. I guess that’s what the American Dream once was: most bar the dispossessed native inhabitants had things, on average, better than their ancestors for enough generations it became an expected truism.

My side of the family before 1815

Megan’s ancestors were remarkably uniform, whereas mine are quite the smorgasbord. Like Megan’s ancestors, one quarter of mine on my Presbyterian mother’s father’s side at that time were also low church Protestants of varying kinds. I can see brothers and sisters of those ancestors emigrating to America and the other British colonies, however obviously my specific ancestors did not. As far as I can tell, the reasons mine stayed were because they were the eldest or second eldest son and thus had an inherited livelihood, or else they married an eldest or second eldest son. As with Megan’s ancestors, men tended to reach their seventies and the women who didn’t die in childbirth usually made their sixties. Subjectively speaking, it looks like life around 1800 was a bit better for the Americans than for this quarter of my ancestors, but only by a bit.

A second quarter on my Catholic mother’s mother’s side did not do well, at least as far back as I can currently see. My grandmother’s father, a gardener for a priest, died in his forties. Even before that during the 19th century, they were lucky to make it into their fifties before death, and life was hard – I can see they had to share a small house with another poor family in the 19th century. They still had it better than many on the island of Ireland at the time – you didn’t want to be a sub-sub-tenant farmer as an example, they were mainly the ones who died from starvation in the potato famine. They were so poor that there were no choices other than to starve to death – emigration was an option only for the slightly wealthy upwards. This branch of my ancestors weren’t that poor, they were maybe one or two rungs above, they definitely could afford an occasional steamer to America – as evidenced by the location of my great grandfather’s demise (San Francisco).

The next quarter were mostly Catholic on my father’s mother’s side. They were a mix of middle class Catholic French Belgians and middle class Protestant probably Methodist English from Suffolk, with jobs such as tailor, composer or teacher. They generally had long healthy lives and had sufficient wealth that all of their relatively few children (by the standards of that time) who reached adulthood could be well educated and given a good start in life. Indeed if you squint a little (childhood mortality was high back then), the children born around 1800 to that quarter of my ancestors had a similar lifestyle to middle class people today – they travelled quite a bit for pleasure, attended leisure activities such as orchestral recitals, and clearly felt a security about life and living which the previous two quarters of my ancestors never knew. The only major obvious difference is rates of childhood mortality, otherwise they look surprisingly contemporary in terms of behaviour, pastimes and inclinations, at least from this distant perspective.

What AI thinks is 'aristocracy'

The final quarter is by far the best documented – mostly due to the work of my Aunt Ruth, thank you! – but also because they were famous at the time due to being either aristocracy or wealthy, and hence lots of ink got spilled about them in lots of places over the centuries in everything from wood carved caricatures to tax receipts. Back in 1800, two of my ancestral lines combined: one was aristocratic, well regarded, and sometimes wealthy but generally plagued with money troubles; the other we had until now thought were wealthy industrialists my ancestors had married into, but I now think were actually also ruling elite, recently dispossessed of their power, which caused them to take a big gamble which paid off, but at the expense of thousands of lives. All the other bloodlines they married into were usually middle class with good jobs like the previously described Catholic quarter, though generally Anglican/Methodist/Lutheran Protestant rather than Catholic. Of the aristocratic bloodline, let us begin with my specific ancestor Sir William Douglas (1730-1783) who was a Member of Parliament for Dumfries between 1768 and 1780, and you can read his Hansard history here. Before 1833, most members of Parliament for a given constituency were chosen by the big landowners of that constituency, which in this case was primarily Charles Douglas, Duke of Queensberry. To be clear, a Member of Parliament back then did NOT work for the people, they worked for and had their salary paid by the big landowner, and they had to do what they were told or they were replaced. The Duke was very keen on my ancestor William who also ran his estate for him as a manager, and left £16,000 to him on his death in 1778, an enormous sum (about £39 million in 2024 sterling by the wages deflator).

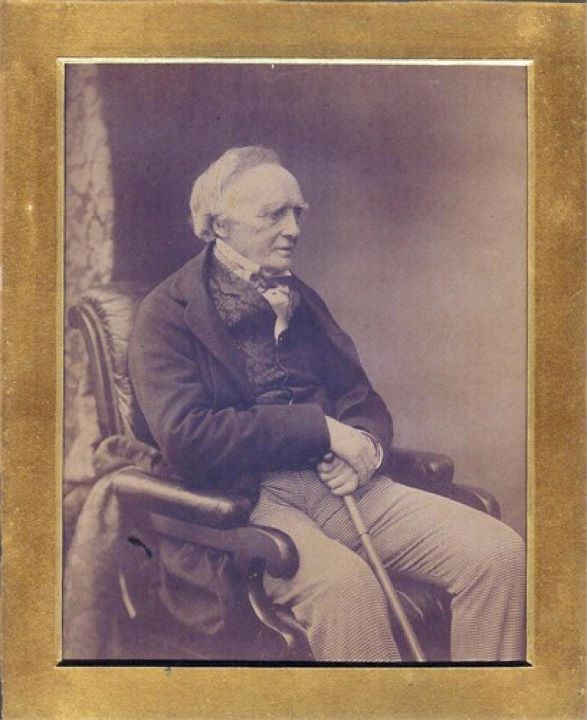

Sir William Douglas (1730-1783)

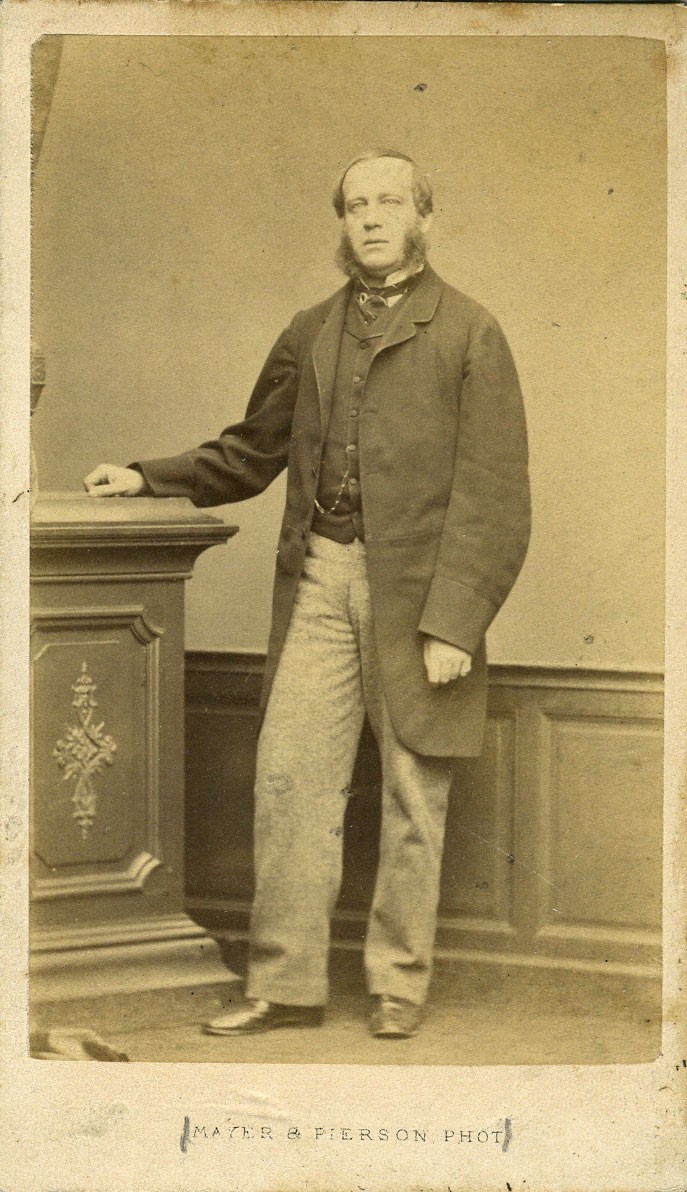

William until that point had never been wealthy. His father, Sir John Douglas, also Member of Parliament 1741-1747 but for Dumfriesshire which neighbours the Dumfries constituency, had died in disgrace in debtor’s prison, which was almost never used on members of the aristocracy except in the most severe and recalcitrant cases (he also got himself locked in the Tower of London pending execution for High Treason against the Crown in 1746, but I digress). William and his siblings, having had no other members of family willing to take them in, ended up being raised with his tutor’s family which was not a wealthy upbringing, and William had to take a job, which was as manager of the Duke’s estate and succeeding to his father’s role as Member of Parliament for that constituency – so he worked for a living and had done so all his adult life. Unfortunately, so overjoyed was he on receiving such a life changing sum of money after a lifetime of severe money issues, he had a fit of apoplexy and died upon receiving the good news. That inherited fortune then formed the basis for his eldest sons’ livelihoods, which meant that my specific ancestor Lord William Robert Keith Douglas (1783-1859), who was the youngest son (and therefore without a livelihood), also needed to take a job like his father. By the time he was an adult, his patron who had inherited the previous Duke’s lands and titles was Walter Francis Montagu Douglas Scott, Duke of Buccleuch and Queensberry, the richest man in Scotland (an actual millionaire in the money of the time; the current Duke, who is that Duke’s lineal descendant, remains today the richest man in Scotland owning about half the total land area).
This Duke did not like my ancestor as much as the previous Duke and often grumbled publicly about him, however he still sent him to Parliament for Dumfries between 1812 and 1832 after which the rotten borough system was abolished, and Hansard has written up an excellent summary of his political life here.