| Date | Summary |
|---|---|
| 1st July 2006 | 3D graphing |
| 7th July 2006 | Cognitive Economic Modelling Notes 1 |
| 13th July 2006 | Cognitive Economic Modelling Notes 2 |
| 17th July 2006 | Cognitive Economic Modelling Notes 3 |
| 25th July 2006 | Cognitive Economic Modelling Notes 4 |

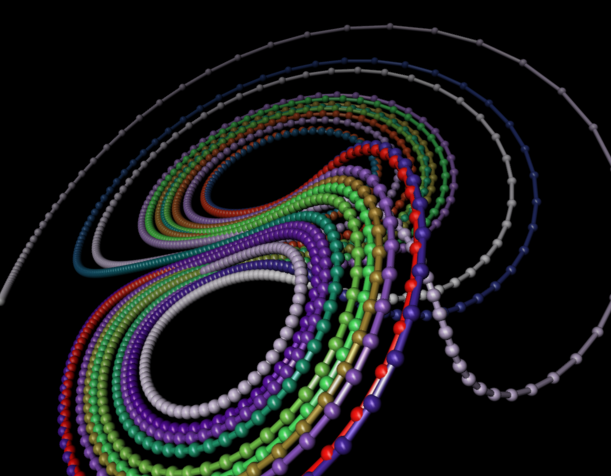

Saturday 1st July 2006: 3.35pm. For once, I think my summer coding might actually be of interest to non-computery people! Three weeks or so in, I have me a fancy 3D graph plotter (though I've only been working on this particular bit for a week):

[Click on it to show it full size]. Now I don't know about you, but I

think that's pretty damn cool! It's the famous Lorentz chaotic weather

equations which tend to characterise as a two point attractor. The whole

point of this new functionality is to be able to visualise chaotic

attractors seeing as they are so prevalent in Economics (as well as

everything else) so before I can start any Economic model, I needed 3D and

2D graphing facilities in TnFOX. I did try the

Visualisation Toolkit, but

it was slow. What

you see above is only half the story - you can rotate and zoom that

picture above in real-time with the mouse - if you have a suitably new

enough machine (last four years). The graph also can update itself as the

data changes ie; be animated which is also cool, especially if you speed it

up. In fact, you can draw far more detail than above which is only 2000

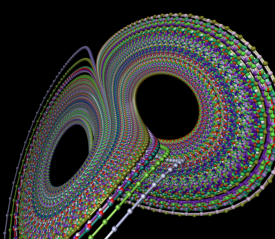

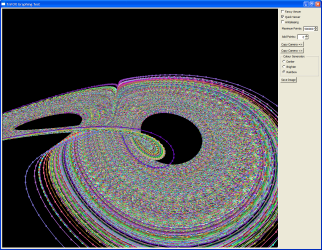

points - on the right, there are [also clickable for full size] examples of

100,000 points (in pretty mode like above) and on the left 500,000 points

(in fast mode) though at those numbers you can't quite rotate it in full

detail in real-time. This is more than enough detail which is good, as I'll

be needing the horse power for my biosphere model equations which I have

here on the back of an envelope. If you'd like to play with these graphs on

your own computer, you

can download the program here (897Kb).
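For anyone wondering how the points in such a plot are produced, here is a minimal sketch in Python. It is not the TnFOX plotter code. It steps the Lorenz equations with a plain forward Euler integrator, and the parameters (sigma = 10, rho = 28, beta = 8/3), the step size and the starting point are assumptions, since the entry does not say which values were used.

```python
def lorenz_points(n=2000, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0,
                  start=(1.0, 1.0, 1.0)):
    """Return n (x, y, z) points along a Lorenz trajectory (forward Euler)."""
    x, y, z = start
    points = []
    for _ in range(n):
        # The three coupled Lorenz equations
        dx = sigma * (y - x)
        dy = x * (rho - z) - y
        dz = x * y - beta * z
        # Advance one small time step
        x, y, z = x + dx * dt, y + dy * dt, z + dz * dt
        points.append((x, y, z))
    return points


points = lorenz_points()      # 2000 points, as in the first picture
print(len(points), points[-1])
```

Feeding `points` to any 3D scatter or line plotter should give the familiar two-lobed shape; a smaller `dt` gives a smoother curve at the cost of more points.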


My inspiration for what these models should look like comes from this web page. I find it astonishing that the pretty pictures they have there took a farm of computers back in 2000 and here we are six years later and you can get close in real-time on one low-end computer. The only missing effect actually is shadows - these take a while to calculate, and besides my vector geometry knowledge is extremely rusty nowadays (it took me a day to figure out how to rotate one vector so it points the same way as another vector) so I left them out.

The next thing is 2D graphs - these are pretty easy and won't take long - and then sometime early next week I'll begin the model itself.

So, what else has been happening? Not a lot - Johanna and I have stopped talking about last semester, and are now talking about more normal everyday stuff. Which is good. Had quite a few emails off quite a few people last few days and it's good to hear from various people, even if often it's commiserations on breaking up with Johanna which hasn't actually happened! The weather has been so-so but basically the summer is already zipping away quite quickly. I shall be heading to Sweden for end of July for a few days, then I should be in London as I usually am for the end of August to visit various people around there.

Anyway, I'm pretty chuffed at the above. I hope you all like them too. Be happy!

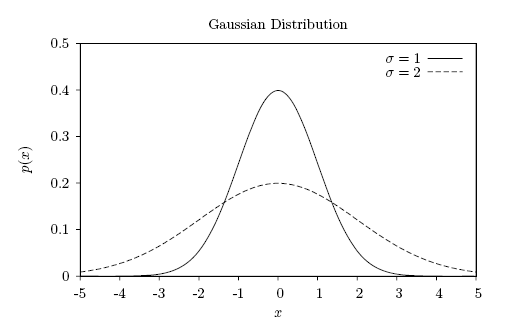

Friday 7th July 2006: 12.47pm. This week I started my Economic model and my first instinct was to model traits which were actualised by a normal curve. Just for reference, this is a normal curve:

... which is given by the equation:

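$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}$$

where $\mu$ is the mean and $\sigma$ is the standard deviation.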

Put simply, traits tend to bunch around the mean but with a non-linear likelihood of extremes. Then two nights ago I had an unpleasant dream which consisted of statistical equations and graphs and my coffee dates M-, S-, K- and Johanna. Thankfully, Johanna's comment when I told her that she should have sex with a normal curve in my dream was not realised!


I realised that my subconscious was telling me I was on the wrong track. I took it to mean that the normal curve should be the result of my model, not the source. So I took a few more days of thinking.

In the meantime, I was lucky enough to have dialogues with V- and K- and this clarified what I should be doing. Previously in this diary I have written of my view that all life solves problems - it is its primary process. All life conducts a series of tests, most of which fail. Those that are successful become habit and improve the mental model of reality that life has. As trial & error is costly because it fails so often, most life copies successful strategies from other life which discovered it first - for most life, this is by embodiment in its genes, but for some life like us that happens very slowly and so we embody strategies by mental acquisition. Mental representation is done by others reducing the new strategy down into some simple rules which are more easily communicated. They become units of perception & action eg; if my habitual brand of beans is out of stock, choose the most recognised brand. If this protein marker is recognised, release hormone. You could call these perception & action units a theory, or a habit, or even a virus.

All life continually retests these strategies by occasional slight variation from habit. If a slight variation is found which is superior, it is an incremental innovation and quickly is copied by other life. Nevertheless, very rarely, step change innovations are found and especially when the community is under stress, are adopted en masse. Older life finds it harder to change, and so new instantiations (children, start-up firms) which fully embody the latest knowledge of their subwebs are better placed to actualise the innovations.
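The retest-and-copy loop described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: a strategy is a single number, the `payoff` function (best at 0.8) is invented, and the rates for trying a variation and for copying are arbitrary. Step change innovations, stress and new instantiations are left out.

```python
import random


def payoff(strategy):
    """Hypothetical fitness: strategies closer to 0.8 do better."""
    return -abs(strategy - 0.8)


def step(population, rng, variation=0.05, trial_rate=0.1, copy_rate=0.5):
    """One round: occasional slight variation from habit, then copying."""
    trials = []
    for habit in population:
        if rng.random() < trial_rate:
            candidate = habit + rng.uniform(-variation, variation)
            # Most tests fail; a variation only becomes habit if it is superior
            if payoff(candidate) > payoff(habit):
                habit = candidate
        trials.append(habit)
    best = max(trials, key=payoff)
    # Others copy the most successful known strategy with some probability
    return [best if rng.random() < copy_rate else h for h in trials]


rng = random.Random(1)                      # fixed seed so runs repeat
population = [rng.random() for _ in range(20)]
start_best = max(payoff(h) for h in population)
for _ in range(200):
    population = step(population, rng)
end_best = max(payoff(h) for h in population)
print(start_best, end_best)
```

Because a habit is only ever replaced by something at least as good, the best payoff in the population can never fall from one round to the next.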

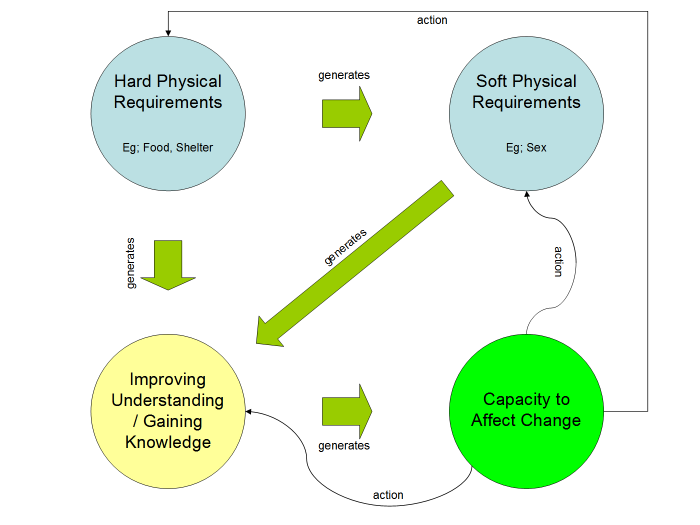

As I have also written previously, all life shares some common drives. I have reduced this to four: (1a) Physical Requirements (eg; food, shelter) (1b) Sex (2a) Improving Understanding/Gaining Knowledge and (2b) Capacity to Effect Change. All four require a continually changing mental representation of their environment to be actualised. All four are in order, in that the later drives with their subgroups cannot be actualised without a minimum of the earlier ones eg; you cannot have sex if you are too hungry, and you cannot have capacity to cause good change without having experience to indicate what to do (note that you can take rash random action hoping to strike lucky if sufficiently desperate).

All drives require security of supply, or put another way, that the organism is in place to process these. Therefore, organisms want somewhere comfortable to live with a regular supply of food. They want a constant supply of mates. Through these previous, they improve their understanding of the world which allows them to take action to cause change. These changes improve their security of supply of all the previous, which in turn lets them create the world they live in, thus looping back to the start. Whether by sedimentation to create coral or limestone, or I creating a pool of coffee dates to interact closely with on a continual basis, or even I being in a positive mood rather than negative, we create the world we live in. How well we do in turn makes us happy or sad. Note you can be happier if you get food when there is no security of supply than when you have everything in abundance but struggle with causing good change - after all, the feedback loop is much simpler.

So, how do I model this? Well for a start, there can be no individuals. Only ants in an ant colony. And in order to not ignore externalities, you can't view any life as being any different from any other life so humans must behave the same as everything else. This greatly reduces the uniqueness of humans on the planet which I think important. However that said, any realistic model of the biosphere must have 99.9% of the life being non-human and well, that's computationally infeasible.

Therefore, given the shortness of the summer break, I think I will model just humans in an incredibly simplified environment. There will be oil, trees, cows and grain. You get a choice of farming your land with any of the latter three: cows and grain by action, trees by inaction (ie; any unfarmed land simply becomes trees). You can use oil to increase the efficacy of farming, so you effectively can eat oil. You can feed your cows trees (at a low efficacy) or grain (in order to grow more cows, but at the sacrifice of trees and grain for humans). You can use cows or wood or oil to increase speed of transport in rising order.

In order to balance the model given its extreme abstraction, you have to take some guesses eg; how much model grain does a model cow require? I'll try basing it on Joules, but the reality is that I'll probably not be able to use equations from Physics. Nevertheless, I'll try - it may be that the relations hold, but not the constants.

However what about the effects of human action on the world? I'm going for a fairly linear model here, in that action comes in two forms - good for the environment (increases diversity) or bad (decreases diversity). Trees have the most diversity, grain less, cows less and settlements least. However, knowledge can lower the impact of human actions on diversity eg; if we reused all those faeces we throw away each year as fertiliser, or designed houses which were also forests, you could almost eliminate the impact. Losing diversity is basically the same as getting old, so productivity of everything drops and sickness more easily sets in.

It is quite legitimate that one group of humans generates a lot of bad action while another group converts that into good action. So, one group could pollute and another convert that pollution into diversity, or at least reduce its damage. A simple example is waste.

What determines what group does what will not be programmed in - the price mechanism will determine it. If rubbish cost a thousand times what it does now, you'd have people paying you to take it away. Of course, you'd usually not be so happy then to throw it away! It will also determine who studies for what, where creativity is to be applied. It's basically a representation of the human value system where the big difference between now and the future is that a lot of items are very much mispriced eg; our air and seas are considered nearly-free dumping grounds.

In theory, you should be able to remove oil and see a pre-industrial revolution pattern of successively more rapid and long lasting civilisations (due to embodied knowledge increasing over time eg; through writing). With oil, you should see a take off fitting the profile of the industrial revolution leading eventually to collapse. In theory, you then change the pricing mechanism and watch the same system not collapse, maybe even exponentially improve if knowledge and innovation were placed as central aims for the population.

Right, off I go and make a start, or else take a break because my head hurts after thinking up all this stuff. But I think I'm getting there! Be happy!

Thursday 13th July 2006: 11.10am. [Graph added on Friday] Once again, for the last few nights I have been waking up after unpleasant dreams. Still something wrong with my model? I think so.

Once again, the dreams contained M-, S-, K- and Johanna and they were even more sexual than the first lot last week - though luckily this time, no statistics! Like on previous nights, I started waking up from around 6am onwards but falling asleep again for shorter and shorter periods, and this morning I did not fall asleep again at all after 8am. My alarm went at 8.30am.

My model has progressed well - I have a state container which can latch its current state into a history from which the next iteration can calculate the new current state. I have an optimised state container which allocates all states in consecutive memory so the processor's SSE unit can run as quickly as possible with parallelised processing. However, I have a problem with my basic ecosystem.
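As a rough illustration of the latching idea, here is a minimal Python sketch. It is not the actual container: the class name, method names and the decay rule are all hypothetical, and a flat Python list merely stands in for the consecutive memory that the SSE-optimised version relies on.

```python
class StateContainer:
    """Holds a current state plus a history of latched past states."""

    def __init__(self, initial):
        self.current = list(initial)   # flat list standing in for contiguous memory
        self.history = []

    def latch(self):
        """Copy the current state onto the end of the history."""
        self.history.append(tuple(self.current))

    def step(self, update):
        """Latch, then compute the new current state from the latched one."""
        self.latch()
        previous = self.history[-1]
        self.current = [update(i, previous) for i in range(len(previous))]


# Hypothetical rule: every value decays by 10% per iteration
container = StateContainer([100.0, 50.0])
for _ in range(3):
    container.step(lambda i, prev: prev[i] * 0.9)
print(container.history, container.current)
```

Reading only from the latched copy means every value in an iteration is computed from the same snapshot, so the order in which values are updated does not matter.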

If you remember, I had an arbitrary space with land proportioned into trees, grain and buildings with cows and oil. My problem became how these worked and my initial solution was fairly linear with grain and buildings eating into trees. Thing is, how do you calculate its health?

This is important, because the same mechanism must apply to the biosphere as humans - because of the rule that all life must be treated equally in the model. And I think the dreams were telling me something I wasn't taking into account - namely, precisely how do actions affect a system, and therefore its health?

With the current linear incarnation, the optimum state for the biosphere is all trees. Therefore, all human action is necessarily destructive. Therefore, the health of the biosphere will always decline as humans rise. This isn't correct - it's too simplistic - in reality, some human action is destructive but a majority is constructive - the health of an ecosystem in general rises due to human input.

Take a plot of land. It might contain entirely trees, which is the best the ecosystem can achieve. Now say a human clears the trees and puts down grain. Now the ecosystem is below optimal, true. However, what if the human adds irrigation systems and tends the grain in such a way as to make it grow and contribute more to the ecosystem than the trees alone could do? Put another way, if you add continual human interaction, an ecosystem can become healthier than it could alone. Think of it - the more plentiful water supply could allow all sorts of life to flourish in that field which without was not possible.

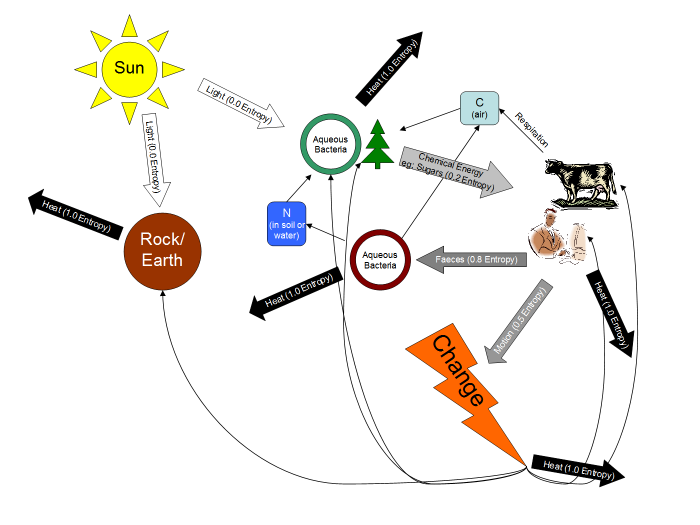

Put more simply, every organism, through habit, engages with its surroundings. It causes inforenergy (I call it this to emphasise the point that all energy carries with it information, and it is by energy flow directed (ie; modulated) by the organism and its surroundings that information is exchanged) to flow from it, through the thing it is interacting with, and back to it. Always the inforenergy gains entropy by doing this ie; becomes more disorganised, or representative of more connections between the Universe, or simply it carries more information than before. Therefore, one could measure the system's health by calculating how much entropy that system consumes, and how widely that entropy consumed is passed on to other things. The overall benefit to the human rewarding their interaction is of course how much raw (low entropy) inforenergy produced by the expended inforenergy is returned to the human - most obviously through eating a tended crop, but less obviously through satisfaction from improved interaction with nature.

As an example, consider a typical field in the EU. Fertiliser and water are regularly applied by the farmer to the field, expending inforenergy previously accumulated by the farmer as well as those systems which produced the fertiliser and water. Now if you apply a large amount of say nitrogen, most of which is currently derived from oil (and might I add, we humans now contribute as much nitrogen to the biosphere as the biosphere itself does so we have effectively doubled it), this removes a limit to growing faster and more plentifully which increases the raw inforenergy harvested by the crop from the sun. One could view this application of nitrogen as equivalent to the use of a tool by a human - a magnifying effect, something which Tn is precisely designed to maximise. You can read all about that on the Tn webpage and in more general terms about computer software itself. We therefore have just equated organic action with a tool which either magnifies or diminishes the action of some other process - chemists would call it a catalyst. The invention of new improved tools is equivalent to technological progress and as we know, bacteria are far more inventive than humans.

However to consider it from the negative direction, synthetic fertiliser is more or less pure nitrogen, so you remove a food source normally present in say animal faeces. This prevents beetles etc. from being able to consume their own entropy, thus lowering the total entropy consumed. Obviously, it helps even less if you use pesticides which kill almost all the insect life. We know from research that the biodiversity of the soil is diminishing since we started doing this - so over time, the soil is becoming sicker and we have to pile on more fertiliser and insecticides to get the same yield. Here our magnifying effect is having a second order effect of damaging nature's magnifying effect because we are replacing it rather than supplementing nature's own magnifying process.

By the Santiago Theory of Cognition and Gaia Theory, we should view any ecosystem as a cognitive network. This mind learns through trial and error and contains memory, just like a human. It learns most obviously how to regulate itself, but also how to defend itself against bad weather, pests, too many animals eating it, too much water, too little water etc. Much like an immune system, too much stress can cause an overreaction which further damages the ecosystem eg; too much nitrogen entering rivers causes an immune response of algae production which in turn starves the water of oxygen, killing everything else. Immune systems, along with bacteriological function and the system described above should offer us a good guide on how to reduce all to the same, common principles which guide them all. This is how our model should behave.

So to sum up, clearing a forest and adding irrigation and fertiliser can improve an ecosystem's contribution to the overall good past that which a forest alone could achieve. In this, other life such as trees is actually the same as humans because while they consume so much light that most plants die off, they create a more dependable supply of water through the absorption and reemission of water (hence why forests are more humid) and generate a great deal of fertiliser - a greater magnifying effect on raw inforenergy gathering than say grasses or fungi. We therefore have reduced human action into a form no different than any other life, which is the requirement.

I stopped for lunch after writing the first draft of this - it is now 1.32pm. For lunch I ate bacon, sausages and eggs when it occurred to me that meat is basically densely packed energy when compared to say cabbage. We all know this, which is one reason we tend to favour eating meat like most animals. However, meat might be more concentrated energy, but it's also higher entropy energy than that in cabbage. And that diminishes the amount of entropy we can consume for ourselves as we have fairly fixed requirements for the highest entropy energy we can make use of. Which could explain why those who consume mostly meat and processed foods are not as healthy as those who consume mostly fresh fruit and vegetables. Put simply, the quality of energy is as important as the quantity of energy we consume. This is very like the distinction I wish to introduce to money by divorcing the money supply - productive money is qualitatively very different from revenue money.

How the hell do you model this though? Obviously you could have energy objects being passed around between ecosystem subsystems, however by definition these must subdivide rapidly as they are dispersed and gain entropy. This implies an exponentially rising complexity, which means it's computationally infeasible. I need some way of representing diversity which does not involve an O(N^x) complexity requirement.
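To put a number on how quickly that blows up, here is a tiny Python sketch. It assumes, purely for illustration, that every energy object splits into a fixed number of pieces at each step of dispersal; the branching factor of 2 is an assumption, not a measured figure.

```python
def packets_after(steps, branching=2):
    """Number of energy objects after each one splits `branching` ways per step."""
    return branching ** steps


for steps in (10, 20, 40):
    print(steps, packets_after(steps))
# 10 1024
# 20 1048576
# 40 1099511627776
```

Even with the gentlest possible split, forty steps of dispersal already means over a trillion objects to track, which is why passing individual energy objects around does not scale.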

Like most Westerners, I'm sure, and I'm pretty sure most Easterners too, I find it rather hard to think in terms of infinities. Neither mystical tradition does much to let us grapple with them directly in terms of creating cold, hard organised maths (ie; computer code) especially when programming is all about thinking in terms of managing limits (limits of processing power, memory and of course finite programmer time). On the other hand, the Eastern philosophy of describing these things as undescribable isn't sufficient, and I know it's incorrect as Western philosophy has shown so successfully that complex systems can indeed be reduced to simple models. I am convinced that by stepping outside the mental box, it is possible to model an ecosystem without these complexity issues becoming problematic - it just requires enough thought.

So, I'm kinda back to the drawing board - though I'm pretty sure magnifying effects of tools are the key just like they are in Tn. Let's hope my subconscious mind has a few more ideas left in it. I definitely know it has something to do with my coffee dates ...

Be happy!

Monday 17th July 2006: 10.30am. Ach, I have started this entry every single morning since the last entry, but I keep making sufficient progress each day to render what I wrote the previous day incorrect at best! Basically, after the last entry a friend of mine emailed me to suggest I go look at Thermal Physics and Statistical Mechanics to see how entropy and energy are treated there. Also, as you might have noticed in the graph in the previous entry, I had pulled entropy gain figures from nowhere and the real ratios need to be based on empirical evidence - so basically, the last three days or so have mostly been spent on the internet doing research. This entry summarises that research.

Sadly, the figures for how the biosphere works are not very reliable. Put simply, no one really was too bothered about how much carbon flows around the biosphere until very recently (literally the last ten years) when global warming became an issue. Even the simple calculation of how much stuff is grown across the entire planet by all life is not reliable. However, what my model requires is not absolute figures, but rather ratios between processes.

Before I start, I should add a major caveat: when we speak of "energy", and I include science here, we usually don't mean energy literally. If one says that a light bulb consumes 20W of energy (W = J/sec), you have to bear in mind that by the first law of thermodynamics, energy can be neither created nor destroyed, so therefore the bulb does not consume energy at all - rather, it consumes quality (ie; generates entropy). The amount of energy that goes into the bulb is precisely the same as the amount that comes out, but its quality is significantly lower - electricity, a low entropy energy, is converted to light and heat.

Science handles this by talking about Gibbs free energy, which is the "useful" amount of energy in a system and it's also denoted in Joules. I personally think this is a rather confusing way of viewing quality of energy, but having seen the history of how they originally discovered entropy, I can see why. Put simply, they discovered at the end of the 19th century that not all energy is alike - some can do work and some can not - so they termed the difference by quantifying the effect of converting the Gibbs free energy into heat. In other words, you get this number representing quality of energy by working out how much work is left in it on the basis that performing work eventually generates heat - or put another way, the absolute "temperature" of the energy directly determines its quality (I put temperature in quotes, because for entropy calculations you would treat glucose as containing sunlight radiated at 5500K even though the glucose itself might be at 300K).

That seems sensible, however it assumes a linear functioning of the world. Take a human's entropy consumption - say a human requires 100W. Now, almost all processes inside a human occur at 37C=310K so that generates 0.323 J/K/sec of entropy. However, the human will live in a 20C=293K environment, so in reality it generates 0.341 J/K/sec of entropy. So where did the missing 0.0187 J/K/sec go? As far as I can see, it goes on "organising relations" - the 0.323 J/K/sec will keep the human at 37C and provide all life functioning, so the extra entropy must be being sunk into our effect on the biosphere. If this sounds like a ludicrous interpretation of the science, think of it this way - why treat light from the sun as having low-entropy? Because the Clausius relation (dS = dQ/T) makes the entropy carried by heat inversely proportional to absolute temperature. Therefore, I can see no reason why mammals, being warmer than their surroundings, are not also sources of low-entropy to the biosphere. Indeed, all life works at a temperature slightly warmer than their surroundings through converting energy to heat, so it would seem that our excessively higher temperature than our surroundings has everything to do with increasing the entropy we can generate relative to our surroundings. I wonder if that has anything to do with temperate regions generating more civilisations than hot regions?
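The three entropy figures above come from dividing the power by the absolute temperature at which it ends up as heat. A short Python check of that arithmetic, where the function name is just an illustrative choice:

```python
def entropy_rate(power_watts, temperature_kelvin):
    """Entropy generated per second (J/K/sec) when power becomes heat at T."""
    return power_watts / temperature_kelvin


power = 100.0                               # W, the figure used in the text
body = entropy_rate(power, 310.0)           # heat released at 37C
surroundings = entropy_rate(power, 293.0)   # same heat arriving at 20C
print(round(body, 3), round(surroundings, 3), round(surroundings - body, 4))
# 0.323 0.341 0.0187
```

The gap is purely a consequence of the same 100W being divided by a lower temperature once the heat has flowed from body to surroundings.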

If you were to take the entire planet, and reduce the entire lot to heat, you would satisfy the scientific interpretation of entropy. However, life can and does continually lock up entropy within itself over time and unless you reckon all life on the planet is going to suddenly stop its 3.5bn years of continuity, the scientific view of entropy doesn't help us. Basically, it's too linear and too based on everything having an equilibrium - namely, absolute zero. Anyway, bear in mind in the subsequent discussion that when I talk of energy, I am really talking about useful energy, ie; the Gibbs free energy or entropy generable from that quality of energy.

Right, onto some hard facts about our world! The Solar Constant, the amount of energy from the sun reaching the sunlit face of the Earth, is roughly 1366 W/m2, varying by about ±0.1% with solar activity. As the Earth's cross-sectional area (the disc it presents to the sun) is around 1.274e14 (127,400,000,000,000) m2, this means 1.74e17 (174,000,000,000,000,000) W reaches the planet. When averaged across the entire surface of the planet rather than just the portion facing the sun, this becomes roughly 340 W/m2. Now, the reflectivity (albedo) of the planet is around 30%, so that much is immediately reflected out unchanged leaving 70% actually entering the biosphere, which is 235 W/m2 which equals 1.2e17 (120,000,000,000,000,000) W.
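These figures can be reproduced from the geometry: sunlight is intercepted by a disc of area pi r^2 but spread over a sphere of area 4 pi r^2, hence the factor of four. The Python sketch below assumes a mean Earth radius of 6371 km, which is not given in the text. A straight 30% albedo comes out at about 239 W/m2, a little above the 235 W/m2 quoted.

```python
import math

SOLAR_CONSTANT = 1366.0   # W/m2, from the text
EARTH_RADIUS = 6.371e6    # m, mean radius (assumed; not given in the text)
ALBEDO = 0.30             # fraction reflected straight back out

disc_area = math.pi * EARTH_RADIUS ** 2          # area facing the sun
sphere_area = 4.0 * math.pi * EARTH_RADIUS ** 2  # whole surface
intercepted = SOLAR_CONSTANT * disc_area         # W reaching the planet
mean_flux = intercepted / sphere_area            # W/m2 averaged over the globe
absorbed_flux = mean_flux * (1.0 - ALBEDO)       # W/m2 entering the biosphere
absorbed = intercepted * (1.0 - ALBEDO)          # W entering the biosphere

print(f"{disc_area:.3e} m2, {intercepted:.3e} W")
print(f"{mean_flux:.1f} W/m2, {absorbed_flux:.1f} W/m2, {absorbed:.2e} W")
```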

The fundamental reaction of photosynthesis is 6CO2 + 12H2O + 56 photons → C6H12O6 + 6O2 + 6H2O. It's actually a lot more complicated than that, but that's what it ends up as. This costs 8 photons of the 56 in entropy generation leaving 48, so 48 photons end up in each glucose (eight on each carbon). Animals and plants metabolise the glucose by C6H12O6 + 6O2 + 6H2O → 6CO2 + 12H2O costing 32 photons in entropy generation, leaving 16 photons for the animal or plant to use. 16 photons is only 28.5% of the original, so there is a maximum efficiency of 28.5% for photosynthesis. The other 71.5% goes into heat. The poor efficiency of 85.7% of glucose creation is the result of a lack of atmospheric oxygen for the first two billion years of life on the planet - C3 Photosynthesis, by chloroplasts (primitive organisms like mitochondria) which evolved before atmospheric oxygen became common, uses the enzyme Rubisco which unfortunately likes to attach to O2 as well as CO2 so considerable energy (about half) is wasted with current atmospheric CO2 concentrations when generating the glucose.
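The photon bookkeeping is easy to lose track of, so here it is as a few lines of Python using only the counts given above. The exact ratio is 16/56 = 28.57%, which the text truncates to 28.5%.

```python
PHOTONS_IN = 56        # photons absorbed per glucose molecule
COST_FIXING = 8        # lost as entropy while building the glucose
COST_METABOLISM = 32   # lost as entropy while metabolising it again

in_glucose = PHOTONS_IN - COST_FIXING         # stored in the glucose
usable = in_glucose - COST_METABOLISM         # left for the organism to use
fixing_efficiency = in_glucose / PHOTONS_IN   # glucose creation step
overall_efficiency = usable / PHOTONS_IN      # sunlight to usable energy

print(in_glucose, usable,
      round(fixing_efficiency * 100, 1), round(overall_efficiency * 100, 1))
# 48 16 85.7 28.6
```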

It however gets worse! According to the paper "Entropy Versus Information: Is A Living Cell A Machine Or A Computer?" by J.A. Tuszynski, around half of the energy a cell consumes goes on ion pumping across its membrane which makes up 60% of its mass. Transmitting DNA only takes about 1/1000th of its energy. Put simply, there are substantial physiological constraints - you can expect the large majority of energy received from the sun to be expended on chemical processes. We'll come back to this shortly.

Back to the biosphere, only half of energy received on the ground is photosynthetically active radiation (the blue and red components of light, which is why most plants are green) which means we now have 6e16 W left. 28.5% of that is 1.71e16 W which is the maximum possible energy available to life through photosynthesis, only 14.25% of the total for the biosphere. We also know that on average plants must expend 40% of their energy on respiration which leaves 60% for biomass production - though in rainforests, tall trees can consume up to 75% of their energy on respiration (mainly lifting water from the ground). This leaves just 1.026e16 W for biomass production, or just 8.55% of the total for the biosphere.
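The chain of reductions above is just a series of multiplications, sketched here in Python with the fractions taken from the text (the variable names are illustrative):

```python
absorbed = 1.2e17           # W entering the biosphere
par_fraction = 0.5          # photosynthetically active share of the light
photosynthesis_max = 0.285  # maximum efficiency of photosynthesis
respiration = 0.40          # average share plants spend on respiration

par = absorbed * par_fraction               # usable light
available = par * photosynthesis_max        # maximum energy available to life
biomass = available * (1.0 - respiration)   # left for biomass production

print(f"{par:.3e} {available:.3e} {biomass:.3e}")
print(f"{available / absorbed:.2%} {biomass / absorbed:.2%}")
# 6.000e+16 1.710e+16 1.026e+16
# 14.25% 8.55%
```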

At this point, the figures get a bit more hazy, mainly because no one really started to think about them until the last twenty years or so when global warming became an issue. The best estimate for total biomass production of all plant life on the planet is 9.51e13 W which means they must have consumed 1.585e14 W of glucose. To generate this much glucose requires 5.561e14 W of light to be absorbed, which is 0.927% of the total possible if the entire planet were covered totally by plant life. This isn't as bad as it looks - a lot of the planet is not covered in life, say due to lack of water or too high or low a temperature. A lot of plants such as trees have growing seasons so they only grow for half the year at most. It is said that even where foliage is dense, only 75% of the photosynthetically active light is actually used eg; due to leaves being thin, or gaps between branches. Even in a forest, you often find plants on the forest floor. If you account for that, it becomes 1.24% which looks reasonable. In theory, you could grow the sustainable energy available to humans fifty fold by doing no more than planting plants.
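Working backwards from the biomass estimate uses the same fractions in reverse. A short Python check of the figures in this paragraph, with illustrative variable names:

```python
biomass_actual = 9.51e13    # W, best estimate of total plant biomass production
biomass_share = 0.60        # share of glucose energy not spent on respiration
photosynthesis_max = 0.285  # maximum efficiency of photosynthesis
par_total = 6e16            # W of photosynthetically active light available
canopy_use = 0.75           # share of that light dense foliage actually uses

glucose = biomass_actual / biomass_share    # W of glucose consumed
light = glucose / photosynthesis_max        # W of light absorbed
share = light / par_total                   # fraction of the possible total
share_adjusted = share / canopy_use         # allowing for gaps in the foliage

print(f"{glucose:.3e} {light:.3e} {share:.3%} {share_adjusted:.2%}")
# 1.585e+14 5.561e+14 0.927% 1.24%
```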

This is what current human energy consumption looks like:

| Source | Percent | Overall | Per Capita |
|---|---|---|---|
| Total Consumption | 100.0% | 12,700,000MW | 2112.5W |
| - Oil | 32.0% | 4,100,000MW | 676.0W |
| - Solid Fuels | 23.0% | 2,900,000MW | 485.9W |
| - Natural Gas | 19.0% | 2,400,000MW | 401.4W |
| - Biofuels | 11.5% | 1,500,000MW | 242.9W |
| - Nuclear | 6.0% | 760,500MW | 126.8W |
| - Hydro | 6.0% | 760,500MW | 126.8W |
| - Food | 2.5% | 316,900MW | 52.8W |
| Sustainable (est. as coal may be in solid fuels) | 43.0% | 5,500,000MW | |
| Non-sustainable (est. as coal may be in solid fuels) | 57.0% | 7,200,000MW | |
| Direct Human Use Of Total Planet Biomass Production | 4.93% | | |
| Total Human Use Of Total Planet Biomass Production | 60.7% | | |

Now that 4.93% is direct use and wouldn't include indirect use. The 90/10 rule (Miller, G.T., Energetics, Kinetics and Life, Wadsworth 1971) means that each step in a food chain releases only 10%-20% of its energy to the next step. So, if I eat a pig, it needs to eat five to ten times the amount of energy I gain from eating the pig. Anything it eats needs to eat another five to ten times the energy the pig gains. Put another way, eating meat gives you one fifth to one tenth of the entropy eating grain does. Therefore, the maximum sustainable total human use of planet biomass production, excluding technological mechanisms, can be 10% or 9.51e12 W. This would correspond to us all eating nothing but grain and not feeding our grain to animals in order to eat them which we currently do to the tune of 40% of all our food production, which effectively means we throw away 40% of our food as most herbivores are perfectly capable of eating things we can't like grass. If you do employ technological measures such as collecting camel dung and burning it for fuel, you can increase this proportion.
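The 90/10 rule compounds with every link in the food chain, which a couple of lines of Python make plain. The function name and the "steps above plants" framing are illustrative; the 10% and 20% transfer rates and the 9.51e13 W biomass figure are the ones used in the text.

```python
def plant_energy_needed(energy_eaten, steps, transfer=0.10):
    """Plant energy behind `energy_eaten` when eaten `steps` links above plants."""
    return energy_eaten / (transfer ** steps)


grain = plant_energy_needed(1.0, 0)            # eating plants directly
pig = plant_energy_needed(1.0, 1)              # pig fed on plants, 10% transfer
pig_best = plant_energy_needed(1.0, 1, 0.20)   # same, at the 20% end of the range
predator = plant_energy_needed(1.0, 2)         # an animal that ate another animal

max_sustainable = 0.10 * 9.51e13               # W, 10% of plant biomass production
print(grain, pig, pig_best, round(predator), f"{max_sustainable:.3e}")
```

So each unit of energy from the pig stands for five to ten units of plant energy, and roughly a hundred once a second animal sits in between.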

It turns out that we now consume 35.4kg of meat per person and 19kg of fish per person. If only three quarters of that actually makes it into our diet, that means 37.7% of human food consumption is either meat or fish (this seems a little high, but it is possible given China and India's economic take-off). Using our 90/10 rule, that increases our demand on plant biomass of that 37.7% by 3.77 times. However, most of the fish we eat themselves eat other fish, never mind the animals which eat meat eaters (eg; bush meat in Africa, or us feeding old cows to new cows), so we can apply the rule again to one third of that 37.7%. This gives us a scaling factor of 32.639 on the 37.7% of protein consumption, which gives us a total cumulative use of planet biomass production of 60.7%. If I had more accurate information, you would probably find that is a gross underestimate.

To contextualise that figure, here are some more hard facts: 75% of available fresh water, 35% of available land and 20% of all energy resources are currently used for food production. To produce 1 kg of animal protein, 3 to 10 kg of plant matter is required depending on the particular animal species and circumstances (however, plant matter is not as energy dense as meat). 1 kg of beef requires 15 m³ of water, 1 kg of lamb requires 10 m³, while for 1 kg of grain 0.4 to 3 m³ is sufficient.

Brand & Melman calculate that beef requires a fossil energy input of 15.5 MJ/kg, poultry meat 18.1 MJ/kg, pork 18.9 MJ/kg, and veal production a massive 46.8 MJ/kg - bear in mind that protein yields the consumer only 16.8MJ/kg. The worst they found was farming catfish, which took 34 times as much fossil energy as the catfish protein was worth to the consumer. Currently corn and barley produce about 5 times as much food energy as they use in terms of fossil energy, but this is rapidly getting worse as soil productivity drops and more artificial fertiliser is needed.
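Putting those figures side by side as ratios makes the comparison easier to see. The sketch below simply divides each quoted fossil input by the 16.8MJ/kg the consumer gets back, which is the comparison the paragraph makes. The names are mine, and it glosses over any difference between a kilo of meat and a kilo of pure protein.

```python
# Energy the consumer gets back, per the text.
protein_energy_mj_per_kg = 16.8

# Fossil energy inputs quoted from Brand & Melman (MJ/kg).
fossil_input_mj_per_kg = {
    "beef": 15.5,
    "poultry": 18.1,
    "pork": 18.9,
    "veal": 46.8,
}

# Fossil energy spent per unit of food energy returned.
fossil_per_food_energy = {
    name: mj / protein_energy_mj_per_kg
    for name, mj in fossil_input_mj_per_kg.items()
}

# These two are quoted directly as ratios in the text.
fossil_per_food_energy["catfish"] = 34.0
fossil_per_food_energy["corn and barley"] = 1.0 / 5.0

for name, ratio in sorted(fossil_per_food_energy.items(), key=lambda kv: kv[1]):
    print(f"{name:16s} {ratio:6.2f} units of fossil energy per unit of food energy")
```

Anything above 1.0 costs more fossil energy than the food energy it returns. On these figures beef sits just under that line and veal well over it.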

Energy density of some substances will be useful. As we saw before, there are 16 photons in glucose which gives it an energy density of 16MJ/kg. Bread has 10.67MJ/kg, Grass 11MJ/kg, Flour 14.23MJ/kg, Wood 15MJ/kg, Meat 16.8MJ/kg, Sugar 16.96MJ/kg, Dried Fruit & Nuts 19MJ/kg, Nuts 27.53MJ/kg, Coal 28MJ/kg, Alcohol 30.5MJ/kg, Vegetable Fats 37MJ/kg, Animal Fats 37.8MJ/kg, Crude Oil 42MJ/kg, Petrol 48.2MJ/kg and Gas 55MJ/kg. The thing that surprised me the most was how little difference there was between meat and sugar, though of course sugar is a great deal more digestible. I suppose atomic matter is the most dense of all, being 89,875,543,056MJ/kg. Just as a comparative, the pizza I'm about to eat has an energy density of 10MJ/kg and all alone contains more energy than I need for the entire day.
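The figure for atomic matter is just E = mc² for one kilogram. A minimal sketch follows; the assumption that the value above was computed with the speed of light rounded to 299,792.5 km/s is mine, though it does reproduce the quoted number.

```python
# Speed of light: the exact SI value, and a rounded value (an assumption
# about what was used to get the figure in the text).
C_EXACT_M_PER_S = 299_792_458.0
C_ROUNDED_M_PER_S = 299_792_500.0


def mass_energy_mj_per_kg(c_m_per_s):
    """Energy equivalent of 1 kg of matter, in MJ, from E = m * c**2."""
    joules_per_kg = c_m_per_s ** 2
    return joules_per_kg / 1e6


print(f"rounded c: {mass_energy_mj_per_kg(C_ROUNDED_M_PER_S):,.0f} MJ/kg")
print(f"exact c:   {mass_energy_mj_per_kg(C_EXACT_M_PER_S):,.0f} MJ/kg")
```

Either way it is about 9e10 MJ/kg, some nine orders of magnitude above anything else in the list.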

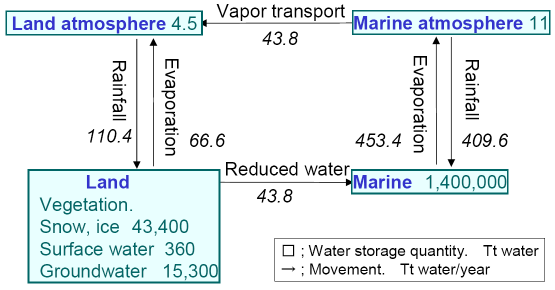

A good proportion of the energy entering the biosphere is expended on the water cycle, without which life could not survive:

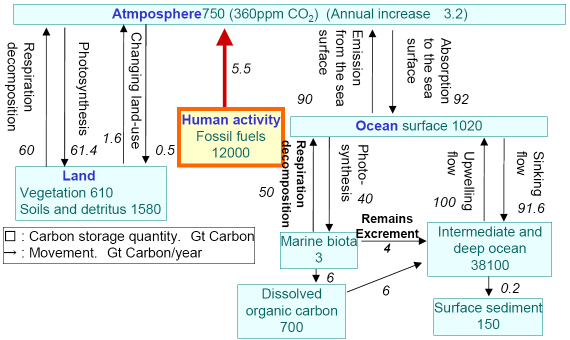

I pulled these graphs from an OECD report so they're probably fairly reliable. I make 1Tt equal to 1e15 kg (a teratonne being 1e12 tonnes of 1,000 kg each), so 520e15 kg of water moves around each year. The energy needed to shuffle all that around the planet is difficult to calculate: vaporising it requires water's latent heat of vaporisation, and then simply shifting it around the planet, given the weight, would consume a great deal as well. We do know the average period of time water stays in the atmosphere is ten days. Now the carbon cycle:

I make 1Gt equal to 1e12 kg (a gigatonne being 1e9 tonnes of 1,000 kg each). From the graph above, 101.4e12 kg of carbon moves around by photosynthesis each year, with the average period in the atmosphere being five years.
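Going back to the water cycle for a moment, the vaporisation part can at least be roughed out. The sketch below is a back-of-envelope estimate under stated assumptions, not a figure from the OECD report. It reads 1 Tt as 1e12 tonnes (1e15 kg), uses a latent heat of about 2.45 MJ/kg (a typical value near ambient temperature, against about 2.26 MJ/kg at boiling point), and ignores the energy needed to move the vapour around.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600

# Assumption: latent heat of vaporisation of water near 20 C, in J/kg.
LATENT_HEAT_J_PER_KG = 2.45e6

# Assumption: 520 Tt per year with 1 Tt = 1e12 tonnes = 1e15 kg.
water_mass_kg_per_year = 520 * 1e15

# Energy to vaporise a year's worth of water, and the average power.
energy_j_per_year = water_mass_kg_per_year * LATENT_HEAT_J_PER_KG
average_power_w = energy_j_per_year / SECONDS_PER_YEAR

print(f"energy per year: {energy_j_per_year:.3e} J")
print(f"average power:   {average_power_w:.3e} W")
```

On those assumptions, vaporisation alone comes to about 4e16 W.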

Refs:

- http://morgan.botany.uga.edu/btny1210/syllabus/lecphotosyn.htm

- http://www.physics.hku.hk/~tboyce/sfseti/12entropy.html

- http://star.tau.ac.il/~eshel/Bio_complexity/11.%20Swarming%20Intelligence/ATP-Information.pdf

- http://www.entropysimple.com/content.htm

- http://www.rwc.uc.edu/koehler/biophys.2ed/entropy.html

- http://www.oecd.org/dataoecd/12/53/36746578.pdf

- http://www.hsc.wvu.edu/sop/compchem/mdpi/entropy/papers/e3030116.pdf

- http://www.mi.uni-hamburg.de/fileadmin/files/forschung/theomet/docs/pdf/kleifrae05.pdf

- http://www.physics.ox.ac.uk/users/jelley/ESoceanbiomass06.ppt

- http://www.oup.co.uk/pdf/bt/echem/chapter04.pdf

I think I have enough now to start my model. I'm sure I'll let you all know how it goes! Be happy!

Tuesday 25th July 2006: 12.34pm.

Tis the day before visiting Johanna in Sweden! I have been taking a break for the last few days because on Thursday I came down with a migraine which slowly got better on the subsequent days. I had been working on a pretty massive entry which actually specified out the basic rules of interaction in the model, but I should emphasise that there are one hell of a lot of permutations. The problem is that my subconscious mind is rather keen on the model, so during the migraines if I could get it to stop thinking, the pain vanished. Thankfully, as I am now older I know how to distract my subconscious though of course, it's always temporary and little insights about the model keep popping into my conscious mind when I relax. I kinda feel like the guy from that movie Pi!

During my few days of taking a break, I caught up on a number of things I needed to get done such as the Future Society website.

However, I do know I am now on the right track. It's actually a classic case of reductionism, and if anything causes it to not work in practice, it will be that. In the end though, you are trying to model infinities - infinite detail, infinite history etc. and these really don't gel well with computers. Instead one must aim for something which cancels out the infinities such that on average, the loss of precision is not important. Hence one must make certain assumptions, such as that human knowledge will always improve in the long run (which isn't guaranteed, but there is a very high probability).

The

model has changed substantially since the start of the summer. Then I had

envisaged model cows, model humans, model trees and such. No longer - the

model I have in mind now is what I think is common for every stage of

emergent order - indeed it is how the order emerges in the first place.

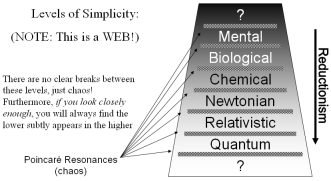

On the right I have a graph which I designed originally for my Burn

presentation at the Philosophy Retreat in 2005. Most people think it is

confusing, but it's very simple. At the quantum level, as at all levels, you

have nothing more nor nothing less than a set of relationships. The emergent

property of that is the macroworld we see around us. The emergent property

of the macroworld is chemicals and chemistry. They in turn have the emergent

property of life. Life then in turn has the emergent property of mind. At

each stage, both the lower and higher stages are present but less strongly

so really it's a web rather than a hierarchy. For example, you can

readily observe chemical effects on the mind, but you can also observe the

effects of the mind on chemicals. This is the effect of entropy to entropy

conversion whereby low-entropy energy is converted to higher-entropy energy

in order to maintain and grow information structures which are continually

pushed towards lower levels of (information) entropy.

There obviously will be loads more about all this next entry when I have finished it, including a laborious explanation of that last sentence! The entry I was working on before the migraines went on for some number of pages. However, the main point is that the model I now envisage can equally model mind, biology, chemistry, classical and quantum physics. It could model an economy as easily as the management structures within a large multinational corporation. Of course, what useful things it can tell you at such an abstract stage would be very limited, but that's where I would like to get by the end of the summer.

Later on, you could then 'stamp' it with an actual application. The quality I love most about this reformed model is that it completely varies according to how you want to look at it. It could just as well be a model of how human ideologies change over time as a model of plant diversity in your garden. This quality exactly maps to reality at the quantum level where you cannot leave out the observer and how the observation is made. As Gregory Bateson quite correctly pointed out, as soon as a human observes something, that observation includes what the human expected to see, their emotional state at the time, all their experience and history up until that point as well as all the experience and history they inherited from their parents and society etc. Wonderful stuff IMHO!

How you stamp it with an application I reckon is by doing to the actors what everything does to itself in Nature: fix the rules for how the actors perceive one another. This rules out those rules evolving on their own as Nature would intend, but it would be good for short runs of the model which is all that is computationally feasible right now anyway. I think that will come sometime after this summer. The other part of stamping it would involve writing a perceptual filter which basically translates what the model is doing into a format which relates to the application we are putting it to eg; a 'group' in the model is better called a 'team' if modelling managerial structures, but better called a 'firm' if modelling economies.
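As a rough illustration of what such a perceptual filter might look like, here is a minimal Python sketch. Everything in it is hypothetical: the event format, the `APPLICATION_VOCABULARY` table and the `perceptual_filter` function are invented for this example and are not part of any existing model code.

```python
# Hypothetical vocabularies: how abstract model terms are renamed
# for each application the model is 'stamped' with.
APPLICATION_VOCABULARY = {
    "management": {"group": "team"},
    "economy": {"group": "firm"},
}


def perceptual_filter(events, application):
    """Relabel abstract model output in the terms of one application.

    Terms without an application-specific name are passed through as-is.
    """
    vocabulary = APPLICATION_VOCABULARY[application]
    return [
        {**event, "kind": vocabulary.get(event["kind"], event["kind"])}
        for event in events
    ]


# Hypothetical abstract output from the model.
model_output = [
    {"kind": "group", "members": 5},
    {"kind": "actor", "members": 1},
]

as_economy = perceptual_filter(model_output, "economy")
as_management = perceptual_filter(model_output, "management")
print(as_economy)
print(as_management)
```

The point of keeping the filter separate is that the model itself stays abstract and only the translation layer changes when you stamp it with a different application.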

Right well that's me done for now. You'll probably hear of me again this time next week when I'll be back from Sweden. Be happy!
